Mobile

In 2015, Shopify began a journey to put mobile first. Today our mobile apps, powered by next-generation APIs, offer the same seamless experience as our web apps.

React Native Skia: A Year in Review and a Look Ahead

With the latest advances in the React Native architecture, allowing direct communication between the JavaScript and native sides, we saw an opportunity to provide an integration for Skia, arguably the most versatile 2D graphics engine. We wondered: How should these two pieces of technology play together? Last December, we published the first alpha release of React Native Skia, followed by eighty-nine more releases over the past twelve months. We went from offering a model that decently fit React Native and Skia together to a fully tailored declarative architecture that’s highly performant. Here, we go over what kept Christian Falch, Colin Gray, and me busy, and take a look at what's ahead for the library.

One Renderer, Three Platforms (and Counting...)

React Native Skia relies on a custom React renderer to express Skia drawings declaratively. This allows us to use the same renderer on iOS and Android, the Web, and even Node.js. Because this renderer has no coupling with the DOM or native APIs, it provides a straightforward path for integrating the library into any new platform where both React and the Skia host API are available.

On React Native, the Skia host API is available via the JavaScript Interface (JSI), exposing the C++ Skia API to JavaScript. On the Web, the Skia API is available via CanvasKit, a WebAssembly build of Skia. We liked the CanvasKit API from the get-go: the Skia team did a great job of conciseness and completeness with this API. It is also based on the Flutter drawing API, showing great relevance to our use-cases. We immediately decided to make our host API fully compatible with it. An interesting side-effect of this compatibility is that we could use our renderer on the Web immediately; in fact, the graphic motions we built for the original project announcement were written using React Native Skia itself via Remotion, a tool to make videos in React.

After the first release, we received a great response from the community, and we were keen to ship the library to as many people as possible. The main tool for bringing web-like development and release agility to React Native is Expo. We coordinated with the team at Expo to have the library work out of the box with Expo dev clients and to integrate it into the Expo Go client. As part of this integration with Expo, it was important to ship full React Native Web support.

On each platform, different GPU APIs are available. We integrated with Metal on iOS, and OpenGL on Android and the Web. Finally, we found our original declarative API to be quite productive; it closely follows the Skia imperative API and augments it with a couple of sensible concepts. We added a paint (an object describing the colors and effects applied to a drawing) to the original Skia drawing context to enable cascading effects such as opacity and some utilities that would feel familiar to React Native developers. The React Native transform syntax can be used directly instead of matrices, and images can be resized in a way that should also feel familiar.
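To make the transform idea concrete, here is a minimal sketch of how a React Native style transform list could be folded into a single 3x3 matrix. It is not the library's implementation: the names `Transform2d`, `composeTransforms`, and `applyToPoint` are hypothetical, only a few transform kinds are covered, `rotate` is assumed to be in radians, and the left-to-right composition order (the CSS convention) is an assumption.

```typescript
// A 3x3 matrix stored in row-major order.
type Matrix3 = number[];

// A small subset of the transform vocabulary (hypothetical type).
// Assumption: rotate is expressed in radians.
type Transform2d =
  | { translateX: number }
  | { translateY: number }
  | { scale: number }
  | { rotate: number };

const identity = (): Matrix3 => [1, 0, 0, 0, 1, 0, 0, 0, 1];

// Standard 3x3 matrix product a * b.
function multiply(a: Matrix3, b: Matrix3): Matrix3 {
  const out: Matrix3 = [];
  for (let row = 0; row < 3; row++) {
    for (let col = 0; col < 3; col++) {
      let sum = 0;
      for (let k = 0; k < 3; k++) {
        sum += a[row * 3 + k] * b[k * 3 + col];
      }
      out.push(sum);
    }
  }
  return out;
}

// Convert one transform entry into its matrix.
function toMatrix(t: Transform2d): Matrix3 {
  if ("translateX" in t) return [1, 0, t.translateX, 0, 1, 0, 0, 0, 1];
  if ("translateY" in t) return [1, 0, 0, 0, 1, t.translateY, 0, 0, 1];
  if ("scale" in t) return [t.scale, 0, 0, 0, t.scale, 0, 0, 0, 1];
  const c = Math.cos(t.rotate);
  const s = Math.sin(t.rotate);
  return [c, -s, 0, s, c, 0, 0, 0, 1];
}

// Fold the list left to right: result = T1 * T2 * ... * Tn.
function composeTransforms(transforms: Transform2d[]): Matrix3 {
  return transforms.reduce((acc, t) => multiply(acc, toMatrix(t)), identity());
}

// Apply the matrix to a point treated as the column vector (x, y, 1).
function applyToPoint(m: Matrix3, x: number, y: number): [number, number] {
  return [m[0] * x + m[1] * y + m[2], m[3] * x + m[4] * y + m[5]];
}
```

With this convention, `composeTransforms([{ translateX: 10 }, { scale: 2 }])` scales a point first and then translates it, so the developer writes a readable list while the renderer still ends up with one matrix.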

The Road to UI Thread Rendering

While the original alpha release was able to run some compelling demos, we quickly identified two significant bottlenecks:

- Using the JavaScript thread. Originally we only ran parts of the drawings on the JS thread to collect the Skia drawing commands to be executed on another thread. But this dependency on the JS thread was preventing us from displaying the first canvas frame as fast as possible. In scenarios where you have a screen transition displaying many elements, including many Skia canvases, locking the JavaScript thread for each canvas creates a short delay that’s noticeable on low-end devices.

- Too many JSI allocations. We quickly came up with use cases where a drawing would contain a couple of thousand components. This means thousands of JSI object allocations and invocations. At this scale, the slight overhead of using JSI (instead of using C++ directly) adds up to something severely noticeable.

Based on this analysis, it was clear that we needed a model to:

- execute drawings entirely on the UI thread

- not rely on JSI for executing the drawing.

That led us to design an API called Skia DOM. While we couldn't come up with a cool name for it, what's inside is super cool.

The Skia DOM API allows us to express any Skia drawing declaratively. Skia DOM is platform agnostic. In fact, we use a C++ implementation of that API on iOS and Android and a JS implementation on React Native Web. This API is also framework agnostic. It doesn’t rely on concepts from React, making it quite versatile, especially for animations.

Behind the scenes, Skia DOM keeps a source of truth of the drawing. Any change to the drawing recomputes the necessary parts of the drawing state and only these necessary parts, allowing for incredible performance levels.

- The React reconciler builds the Skia DOM, a declarative representation of a Skia drawing, via JSI.

- Because the Skia DOM has no dependency on the JavaScript thread, it can always draw on the UI thread, and the time to first frame is very short.

- Another benefit of the Skia DOM API is that it only computes things once. It can receive updates from the JS or animation thread. An update recomputes only the necessary parts of a drawing.

- The Skia API is directly available via a thin JSI layer. This is useful to build objects such as paths or shaders.
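The "recompute only what changed" idea can be illustrated with a small retained tree that caches each node's drawing commands. This is a toy model written for this explanation, not the Skia DOM API: the `DrawingNode` class, its methods, and the string "commands" are all hypothetical.

```typescript
// Hypothetical model of a retained drawing tree; names are illustrative
// and do not correspond to the real Skia DOM API.
class DrawingNode {
  private parent: DrawingNode | null = null;
  private readonly children: DrawingNode[] = [];
  private cache: string[] | null = null;
  // Number of times this node rebuilt its commands (for demonstration only).
  recomputeCount = 0;

  constructor(
    private readonly type: string,
    private readonly props: Record<string, number>
  ) {}

  addChild(child: DrawingNode): void {
    child.parent = this;
    this.children.push(child);
    this.invalidate();
  }

  setProp(name: string, value: number): void {
    if (this.props[name] === value) return; // Nothing changed, keep caches.
    this.props[name] = value;
    this.invalidate();
  }

  // Drop the cache of this node and its ancestors; siblings keep theirs.
  private invalidate(): void {
    let node: DrawingNode | null = this;
    while (node !== null) {
      node.cache = null;
      node = node.parent;
    }
  }

  // Return cached commands, rebuilding only when this subtree is dirty.
  commands(): string[] {
    if (this.cache !== null) return this.cache;
    this.recomputeCount += 1;
    const result = [`${this.type} ${JSON.stringify(this.props)}`];
    for (const child of this.children) {
      result.push(...child.commands());
    }
    this.cache = result;
    return result;
  }
}
```

In this sketch, changing one circle's radius rebuilds that circle and its ancestors, while every sibling subtree is served from its cache. The design choice worth noting is that the tree itself is the source of truth, so updates can arrive from any thread that is allowed to call `setProp`.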

Interestingly enough, when we started with this project, we took a lot of inspiration from existing projects in the Skia ecosystem such as CanvasKit. With Skia DOM, we have created a declarative model for Skia drawing that can be extremely useful for projects outside the React ecosystem.

The Magic Of Open Source

For React Native Skia to be a healthy open-source project, we focused on extensibility and quality assurance. React Native Skia provides extension points allowing developers to build their own libraries on top of it. And the community is already taking advantage of it. Two projects, in particular, have caught our attention.

The first one extends React Native Skia with the Skottie module. Skottie is a Lottie player implemented in Skia. While we don’t ship the Skottie module as part of React Native Skia, we made sure that library developers can use our C++ API to extend it with any module they wish. That means we can keep the size of the core library small while letting developers opt in to the extra modules they wish to use.

Of all our great open-source partners, none has taken the library on such a crazy ride as the Margelo agency did. The React Native Vision Camera is a project that allows React Native developers to write JavaScript plugins to process camera frames on the UI frame. The team has worked hard to enable Skia image filters and effects to be applied in real time onto camera frames.

React Native Skia is written in TypeScript and C++. As part of the project quality assurance, we heavily rely on static code analysis for both languages. We also built an end-to-end test suite that draws each example on iOS, Android, and Web. Then we check that the drawings are correct and identical on each platform. We can also use it to test for Skia code executions where the result is not necessarily a drawing but can be a Skia object such as a path for instance. By comparing results across platforms, we gained tons of insights on Skia (for instance, we realized how each platform displays fonts slightly differently). And while the idea of building reliable end-to-end testing in React Native can seem daunting, we worked our way up (by starting from node testing only and then incrementally adding iOS and Android) to a test environment that is really fun and has substantially helped improve the quality of the library.
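The post doesn't describe how drawings are compared, so the following is only a sketch of one common approach: comparing two RGBA pixel buffers with a small per-channel tolerance. The function name `mismatchRatio` and the default tolerance are assumptions made for illustration.

```typescript
// Compare two RGBA buffers of equal size and return the fraction of pixels
// whose channels differ by more than `tolerance`.
// Assumption: both buffers hold 4 bytes per pixel in the same channel order.
function mismatchRatio(
  a: Uint8Array,
  b: Uint8Array,
  tolerance: number = 2
): number {
  if (a.length !== b.length || a.length % 4 !== 0) {
    throw new Error("Buffers must have the same RGBA length");
  }
  const pixelCount = a.length / 4;
  let mismatched = 0;
  for (let p = 0; p < pixelCount; p++) {
    for (let c = 0; c < 4; c++) {
      const index = p * 4 + c;
      if (Math.abs(a[index] - b[index]) > tolerance) {
        mismatched += 1;
        break; // Count each pixel at most once.
      }
    }
  }
  return pixelCount === 0 ? 0 : mismatched / pixelCount;
}
```

A tolerance-based check like this leaves room for small platform differences such as font rasterization, which an exact byte comparison would flag as failures.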

We also worked on documentation. Docusaurus appears to be the gold standard for documenting open-source projects, and it hasn’t disappointed. Thanks to Shiki Twoslash, we could add TypeScript compiler checking to our documentation examples, allowing us to statically check that all of our documentation examples compile against the current version and that the example results are part of the test suite.

A Look Ahead to 2023

Now that we have a robust model for UI thread rendering with Skia DOM, we’re looking to use it for animations. This means butter smooth animation even when the JavaScript thread is not available. We have already prototyped Skia animations via JavaScript worklets and we are looking to deliver this feature to the community. For animations, we are also looking at UI-thread-level integration between Skia and Reanimated. As mentioned in a Reanimated/Skia tutorial, we believe that a deep integration between these two libraries is key.

We’re also looking to provide advanced text layouts using the Skia paragraph module. Advanced text layouts will enable a new range of use cases such as advanced text animations which are currently not available in React Native as well as having complex text layouts available alongside existing Skia drawings.

Can Skia take your React Native App to the next level in 2023? Let us know your thoughts on the project discussion board, and until then: Happy Hacking!

William Candillon is the host of the “Can it be done in React Native?” YouTube series, where he explores advanced user experiences and animations from the perspective of React Native development. While working on this series, William partnered with Christian to build the next generation of React Native UIs using Skia.

Lessons From Building Android Widgets

By Matt Bowen, James Lockhart, Cecilia Hunka, and Carlos Pereira

When the new widget announcement was made for iOS 14, our iOS team went right to work designing an experience to leverage the new platform. However, widgets aren’t new to Android and have been around for over a decade. Shopify cares deeply about its mobile experience and for as long as we’ve had the Shopify mobile app, both our Android and iOS teams ship every feature one-to-one in unison. With the spotlight now on iOS 14, this was a perfect time to revisit our offering on Android.

Because our widget offering was the same on both platforms, we knew, just like our iOS counterparts at the time, that merchants were using our widgets but needed more.

Why Widgets are Important to Shopify

Our widgets mainly focus on analytics that help merchants understand how they’re doing and gain insights to make better decisions quickly about their business. Monitoring metrics is a daily activity for a lot of our merchants, and on mobile, we have the opportunity to give merchants a faster way to access this data through widgets. They provide merchants a unique avenue to quickly get a pulse on their shops that isn’t available on the web.

After gathering feedback and continuously looking for opportunities to enhance our widget capabilities, we’re at our third iteration, and we’ll share with you the challenges we faced and how we solved them.

Why We Didn’t Use React Native

A couple of years ago Shopify decided to go full on React Native. New development should be done in React Native, and we’re also migrating some apps to the technology. This includes the flagship admin app, which is the companion app to the widgets.

Then why not write the widgets in React Native?

After doing some initial investigation, we quickly hit some roadblocks (like the fact that RemoteViews are the only way to create widgets). There’s currently no official support in the React Native community for RemoteViews, which is needed to support widgets. This felt like forcing a square peg into a round hole. Our iOS app also ran into issues supporting React Native, and we were running down the same path. Shopify believes in using the right tool for the job, and we believe that native development was the right call in this case.

Building the Widgets

When building out our architecture for widgets, we wanted to create a consistent experience on both Android and iOS while preserving platform idioms where it made sense. In the sections below, we want to give you a view of our experiences building widgets, pointing out some of the more difficult challenges we faced. Our aim is to shed some light on these less-used surfaces, hopefully give some inspiration, and save you time when it comes to implementing widgets in your applications.

Fetching Data

Some types of widgets have data that change less frequently (for example, reminders) and some that can be forecasted for the entire day (for example, calendar and weather). In our case, the merchants need up-to-date metrics about their business, so we need to show data as fresh as possible. Delays in data can cause confusion, or even worse, delay information that could change an action. Say you follow the stock market, you expect the stock app and widget data to be as up to date as possible. If the data is multiple hours stale, you may have missed something important! For our widgets to be valuable, we need information to be fresh while considering network usage.

Fetching Data in the App

Widgets can be kept up to date with relevant and timely information by using data available locally or fetching it from a server. The server fetching can be initiated by the widget itself or by the host app. In our case, since the app doesn’t need the same information the widget needs, we decided it would make more sense to fetch it from the widget.

One benefit of how widgets are managed in the Android ecosystem compared to iOS is the flexibility. On iOS you have limited communication between the app and widget, whereas on Android there don’t seem to be the same restrictions. This becomes clear when we think about how we configure a widget. The widget configuration screen has access to all of the libraries and classes that our main app does. It’s no different than any other screen in the app. This is mostly true of the widget as well. We can access the resources contained in our main application, so we don’t need to duplicate any code. The only restrictions in a widget come with building views, which we’ll explore later.

When we save our configuration, we use shared preferences to persist data between the configuration screen and the widget. When a widget update is triggered, the shared preferences data for a given widget is used to build our request, and the results are displayed within the widget. We can read that data from anywhere in our app, allowing us to reuse this data in other parts of our app if desired.

Making the Widgets Antifragile

The widget architecture is built in a way that updates are mindful of battery usage, where updates are controlled by the system. In the same way, our widgets must also be mindful of saving bandwidth when fetching data over a network. While developing our second iteration, we came across a peculiar problem that was exacerbated by our specific use case. Since we need data to be fresh, we always pull new data from our backend on every update. Each update is approximately 15 minutes apart to avoid having our widgets stop updating. What we found is that widgets call their update method, onUpdate(), more than once in an update cycle. In widgets like calendar, these extra calls come without much extra cost as the data is stored locally. However, in our app, this was triggering two to five extra network calls for the same widget with the same data in quick succession.

In order to correct the unnecessary roundtrips, we built a simple short-lived cache:

- The system asks our widget to provide new data from Reportify (Shopify’s data service).

- We first look into the local cache using the widgetID provided by the system.

- If there’s data, and that data was set less than one minute ago, we return it and avoid making a network request. We also take configuration such as locale into account, so that a language change still forces an update.

- Otherwise, we fetch the data as normal and store it in the cache with the timestamp.

With this solution, we reduced redundant network calls and system load and avoided collecting incorrect analytics.
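The cache steps can be sketched as follows. This is a language-neutral model written in TypeScript rather than the Android implementation: `ShortLivedCache`, `loadWidgetData`, and the `configKey` string (standing in for locale and other configuration) are hypothetical names, and the fetch is kept synchronous for brevity, whereas a real network call would be asynchronous.

```typescript
interface CacheEntry<T> {
  data: T;
  storedAt: number; // Epoch milliseconds when the entry was written.
  configKey: string; // Hypothetical fingerprint of locale and other settings.
}

class ShortLivedCache<T> {
  private readonly entries = new Map<number, CacheEntry<T>>();

  constructor(
    private readonly ttlMs: number = 60 * 1000,
    // The clock is injectable so the expiry logic can be tested.
    private readonly now: () => number = () => Date.now()
  ) {}

  // Return cached data only if it is fresh and was stored for the same config.
  get(widgetId: number, configKey: string): T | undefined {
    const entry = this.entries.get(widgetId);
    if (entry === undefined) return undefined;
    const isFresh = this.now() - entry.storedAt < this.ttlMs;
    const sameConfig = entry.configKey === configKey;
    return isFresh && sameConfig ? entry.data : undefined;
  }

  put(widgetId: number, configKey: string, data: T): void {
    this.entries.set(widgetId, { data, storedAt: this.now(), configKey });
  }
}

// Look in the cache first; fall back to the (hypothetical) fetch on a miss.
function loadWidgetData<T>(
  cache: ShortLivedCache<T>,
  widgetId: number,
  configKey: string,
  fetchFresh: () => T
): T {
  const cached = cache.get(widgetId, configKey);
  if (cached !== undefined) return cached;
  const fresh = fetchFresh();
  cache.put(widgetId, configKey, fresh);
  return fresh;
}
```

Keying the entry on both the widget ID and a configuration fingerprint is what lets a burst of identical update calls share one response while a locale change still goes to the network.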

Implementing Decoder Strategy with Dynamic Selections

We follow an approach similar to the one we use on iOS. We create a dynamic set of queries based on what the merchant has configured.

For each metric we have a corresponding definition implementation. This approach gives each metric complete flexibility around what data it needs and how it decodes the data from the response.

When Android asks us to update our widgets, we pull the merchant’s selection from our configuration object. Since each of the metric IDs has a definition, we map over them to create a dynamic set of queries.

We include an extension on our response object that binds the definitions to a decoder. Our service sends back an array of the response data corresponding to the queries made. We map over the original definitions, decoding each chunk to the expected return type.
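As a rough illustration of this pattern (not the production code), each metric definition can own both its query and its decoder, and the response array is decoded by position. Every name here is hypothetical, including `MetricDefinition`, the two sample metrics, the placeholder query strings, and the response shapes.

```typescript
// Each definition knows how to ask for its data and how to decode the answer.
interface MetricDefinition<T> {
  readonly id: string;
  buildQuery(dateRange: string): string;
  decode(raw: unknown): T;
}

// Two made-up metrics with different return types.
const totalSales: MetricDefinition<number> = {
  id: "total_sales",
  buildQuery: (dateRange) => `metric=total_sales&range=${dateRange}`,
  decode: (raw) => Number((raw as { value: string }).value),
};

const topProducts: MetricDefinition<string[]> = {
  id: "top_products",
  buildQuery: (dateRange) => `metric=top_products&range=${dateRange}`,
  decode: (raw) => (raw as { names: string[] }).names,
};

// Registry from metric ID to definition.
const definitions: Record<string, MetricDefinition<unknown>> = {
  [totalSales.id]: totalSales,
  [topProducts.id]: topProducts,
};

// Build one query per metric the merchant selected, preserving order.
function buildQueries(selectedIds: string[], dateRange: string): string[] {
  return selectedIds.map((id) => definitions[id].buildQuery(dateRange));
}

// Decode each response chunk with the definition at the same position.
function decodeResponses(
  selectedIds: string[],
  responses: unknown[]
): Record<string, unknown> {
  const decoded: Record<string, unknown> = {};
  selectedIds.forEach((id, index) => {
    decoded[id] = definitions[id].decode(responses[index]);
  });
  return decoded;
}
```

Because queries and responses share the order of the merchant's selection, adding a new metric in this sketch only means registering one more definition.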

Building the UI

Similar to iOS, we support three widget sizes for versions prior to Android 12 and follow the same rules for cell layout, except for the small widget. The small widget on Android supports a single metric (compared to the two on iOS) and the smallest widget size on Android is a 2x1 grid. We quickly found that only a single metric would fit in this space, so this design differs slightly between the platforms.

Unlike iOS with Swift previews, we were limited to XML previews and running the widget on the emulator or device. We’re also building widgets dynamically, so even XML previews were relatively useless if we wanted to see an entire widget preview. Widgets are currently on the 2022 Jetpack Compose roadmap, so this is likely to change soon with Jetpack Compose previews.

With the addition of dynamic layouts in Android 12, we created five additional sizes to support each size in between the original three. These new sizes are unique to Android. This also led to using grid sizes as part of the naming convention in our WidgetLayout enum.

For the structure of our widget, we used an enum that acts as a blueprint to map the appropriate layout file to an area of our widget. This is particularly useful when we want to add a new widget because we simply need to add a new enum configuration.

To build the widgets dynamically, we read our configuration from shared preferences and provide that information to the RemoteViews API.

If you’re familiar with the RemoteViews API, you may notice the updateView() method, which is not a default RemoteViews method. We created this extension method as a result of an issue we ran into while building our widget layout in this dynamic manner. When a widget updates, the new remote views get appended to the existing ones. As you can probably guess, the widget didn’t look so great. Even worse, more remote views get appended on each subsequent update. We found that combining the two RemoteViews API methods removeAllViews() and addView() solved this problem.

Once we build our remote views, we then pass the parent remote view to the AppWidgetProvider updateAppWidget() method to display the desired layout.

It’s worth noting that we attempted to use partiallyUpdateAppWidget() to stop our remote views from appending to each other, but encountered the same issue.

Using Dynamic Dates

One important piece of information on our widget is the last updated timestamp. It helps remove confusion by allowing merchants to quickly know how fresh the data they’re looking at is. If the data is quite stale (say you went to the cottage for the weekend and missed a few updates) and there wasn’t a displayed timestamp, you would assume the data you’re looking at is up to the second. This can cause unnecessary confusion for our merchants. The solution here was to ensure there’s some communication to our merchant about when the last update was made.

In our previous design, we only had small widgets, and they were able to display only one metric. This information resulted in a long piece of text that, on smaller devices, would sometimes wrap and show over two lines. This was fine when space was abundant in our older design but not in our new data-rich designs. We explored how we could best work with timestamps on widgets, and the most promising solution was to use relative time. Instead of having a static value such as “as of 3:30pm” like our previous iteration, we would have a dynamic date that would look like: “1 min, 3 sec ago.”
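For reference, a relative label of that shape could be produced by a small formatter like the one below. This is an illustrative sketch only: the function name `formatRelative` and the exact unit labels are assumptions, and it is not how the widget renders its timestamp.

```typescript
// Format the time elapsed since `updatedAtMs` as a short relative label.
// Seconds are shown only while the elapsed time is under one hour.
function formatRelative(updatedAtMs: number, nowMs: number): string {
  const elapsedSec = Math.max(0, Math.floor((nowMs - updatedAtMs) / 1000));
  const hours = Math.floor(elapsedSec / 3600);
  const minutes = Math.floor((elapsedSec % 3600) / 60);
  const seconds = elapsedSec % 60;

  const parts: string[] = [];
  if (hours > 0) parts.push(`${hours} hr`);
  if (minutes > 0) parts.push(`${minutes} min`);
  if (hours === 0) parts.push(`${seconds} sec`);
  return `${parts.join(", ")} ago`;
}
```

A label like this is only correct at the moment it is rendered, so it has to be redrawn continually to stay truthful, which is the cost a widget has to weigh against its limited update budget.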

One thing to remember is that even though the widget is visible, we have a limited number of updates we can trigger. Otherwise, it would be consuming a lot of unnecessary resources on the device. We knew we couldn’t keep triggering updates on the widget as often as we wanted. Android has a strategy for solving this with TextClock. However, it has no support for relative time, so it wouldn’t be useful in our use case. We also explored using Alarms, but potentially updating every minute would consume too much battery.

One big takeaway we had from these explorations was to always test your widgets under different conditions, especially low battery or poor network. These surfaces are much more restrictive than general applications, and the OS is much more likely to ignore updates.

We eventually decided that we wouldn’t use relative time and kept the widget’s refresh time as a timestamp. This way we have full control over things like date formatting and styling.

Adding Configuration

Our new widgets have a great deal of configuration options, allowing our merchants to choose exactly what they care about. For each widget size, the merchant can select the store, a certain number of metrics and a date range. This is the only part of the widget that doesn’t use RemoteViews, so there aren’t any restrictions on what type of View you may want to use. We share information between the configuration and the widget via shared preferences.

Working with Charts and Images

Android widgets are limited to RemoteViews as their building blocks and are very restrictive in terms of the view types supported. If you need to support anything outside of basic text and images, you need to be a bit creative.

Our widgets support both a sparkline and a spark bar chart built using the MPAndroidChart library. We have these charts already configured and styled in our main application, so the reuse here was perfect, except that we can’t use any custom Views in our widgets. Luckily, this library creates the charts by drawing to the canvas, so we simply export the charts as a bitmap to an image view.

Once we were able to measure the widget, we constructed a chart of the required size, created a bitmap, and set it on an ImageView in our RemoteViews. One small thing to remember with this approach is that if you want to have transparent backgrounds, you’ll have to use ARGB_8888 as the Bitmap.Config. This simple bitmap-to-ImageView approach allowed us to handle any custom drawing we needed to do.

Eliminating Flickering

One minor, but annoying issue we encountered throughout the duration of the project is what we like to call “widget flickering.” Flickering is a side-effect of the widget updating its data. Between updates, Android uses the initialLayout from the widget’s configuration as a placeholder while the widget fetches its data and builds its new RemoteViews. We found that it wasn’t possible to eliminate this behavior, so we implemented a couple of strategies to reduce the frequency and duration of the flicker.

The first strategy is used when a merchant first places a widget on the home screen. This is where we can reduce the frequency of flickering. In an earlier section “Making the Widgets Antifragile,” we shared our short-lived cache. The cache comes into play because the OS will trigger multiple updates for a widget as soon as it’s placed on the home screen. We’d sometimes see a quick three or four flickers, caused by updates of the widget. After the widget gets its data for the first time, we prevent any additional updates from happening for the first 60 seconds, reducing the frequency of flickering.

The second strategy reduces the duration of a flicker (or how long the initialLayout is displayed). We store the widget’s configuration as part of shared preferences each time it’s updated, so we always have a snapshot of what widget information is currently displayed. When the onUpdate() method is called, we invoke a renderEarlyFromCache() method as early as possible. The purpose of this method is to build the widget via shared preferences. We provide this cached widget as a placeholder until the new data has arrived.

Gathering Analytics

Since our first widgets were developed, we added strategic analytics in key areas so that we could understand how merchants were using the functionality. This allowed us to learn from the usage so we could improve on them. The data team built dashboards displaying detailed views of how many widgets were installed, the most popular metrics, and sizes.

Most of the data used to build the dashboards came from analytics events fired through the widgets and the Shopify app.

For these new widgets, we wanted to better understand adoption and retention of widgets, so we needed to capture how users are configuring their widgets over time and which ones are being added or removed.

Detecting Addition and Removal of Widgets

Unlike iOS, capturing this data in Android is straightforward. To capture when a merchant adds a widget, we send our analytical event when the configuration is saved. When a widget is removed, the widget’s built-in onDeleted() method gives us the widget ID of the removed widget. We can then look up our widget information in shared preferences and send our event prior to permanently deleting the widget information from the device.
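The ordering matters here: the removal event has to be built from the stored configuration before that configuration is deleted. A minimal model of the flow, with a `Map` standing in for shared preferences and with hypothetical names (`WidgetTracker`, `WidgetConfig`, the event names), might look like this:

```typescript
interface WidgetConfig {
  shopId: string;
  metrics: string[];
}

interface AnalyticsEvent {
  name: "widget_added" | "widget_removed";
  widgetId: number;
  metrics: string[];
}

// Hypothetical tracker; the Map stands in for shared preferences.
class WidgetTracker {
  private readonly configs = new Map<number, WidgetConfig>();

  constructor(private readonly send: (event: AnalyticsEvent) => void) {}

  // Called when the configuration screen is saved.
  onConfigurationSaved(widgetId: number, config: WidgetConfig): void {
    this.configs.set(widgetId, config);
    this.send({ name: "widget_added", widgetId, metrics: config.metrics });
  }

  // Called with the IDs of removed widgets.
  onDeleted(widgetIds: number[]): void {
    for (const widgetId of widgetIds) {
      const config = this.configs.get(widgetId);
      if (config !== undefined) {
        // Send the event first, while the stored configuration still exists.
        this.send({ name: "widget_removed", widgetId, metrics: config.metrics });
      }
      this.configs.delete(widgetId);
    }
  }
}
```

If the delete ran before the lookup, the removal event would carry no information about which metrics the merchant had chosen.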

Supporting Android 12

When we started development of our new widgets, our application was targeting Android 11. We knew we’d be targeting Android 12 eventually, but we didn’t want the upgrade to block the release. We decided to implement Android 12 specific features once our application targeted the newer version, leading to an unforeseen issue during the upgrade process with widget selection.

Our approach to widget selection in previous versions was to display each available size as an option. With the introduction of responsive layouts, we no longer needed to display each size as its own option. Merchants can now pick a single widget and resize it to their desired layout. In previous versions, merchants could select a small, medium, or large widget. In versions 12 and up, merchants can only select a single widget that can be resized to the same layouts as small, medium, large, and several other layouts that fall in between. This pattern follows what Google does with their large weather widget included on devices, as well as an example in their documentation. We disabled the medium and small widgets in Android 12 by adding a flag to our AndroidManifest and setting that value in attrs.xml for each version.

The approach above behaved as expected: the medium and small widgets were no longer available in the picker. However, if a merchant was already on Android 12 and had added a medium or small widget before our widget update, those widgets were removed from their home screen. This could easily be seen as a bug and reduce confidence in the feature. In retrospect, we could have prototyped what the upgrade would have looked like to a merchant who was already on Android 12.

Allowing only the large widget to be available was a data-driven decision. By tracking widget usage at launch, we saw that the large widget was the most popular and removing the other two would have the least impact on current widget merchants.

Building New Layouts

We encountered an error when building the new layouts that fit between the original small, medium and large widgets.

After researching the error, we found that we were exceeding the Binder transaction buffer. However, the buffer’s size is 1 MB and the error reported 0.66 MB, which doesn’t exceed the documented buffer size. The error appears to have stumped a lot of developers. After experimenting with ways to get the size down, we found that we could either drop a couple of entire layouts or remove support for a fourth and fifth row of the small metric. We decided on the latter, which is why our 2x3 widget only has three rows of data when it has room for five.

Rethinking the Configuration Screen

Now that we have one widget to choose from, we had to rethink what our configuration screen would look like to a merchant. Without our three fixed sizes, we could no longer display a fixed number of metrics in our configuration.

Our only choice was to display the maximum number of metrics available for the largest size (which is seven at the time of this writing). Not only did this make the most sense to us from a UX perspective, but we also had to do it this way because of how the new responsive layouts work. Android has to know all of the possible layouts ahead of time. Even if a user shrinks their widget to a size that displays a single metric, Android has to know what the other six are, so it can be resized to our largest layout without any hiccups.

We also updated the description that’s displayed at the top of the configuration screen that explains this behavior.

Capturing More Analytics

On iOS, we capture analytical data when a merchant reconfigures a widget to gain insights into usage patterns. Reconfiguration for Android was only possible in version 12 and due to the limitations of the AppWidgetProvider’s onAppWidgetOptionsChanged() method, we were unable to capture this data on Android.

I’ll share more information about building our layouts in order to give context to our problem. Setting breakpoints for new dynamic layouts works very well across all devices. Google recommends creating a mapping of your breakpoints to the desired remote view layout. To build on a previous example where we showed the buildWidgetRemoteView() method, we used this method again as part of our breakpoint mapping. This approach allows us to reliably map our breakpoints to the desired widget layout.
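To show what a breakpoint mapping amounts to, here is a simplified model of choosing a layout for the available space. On Android the platform performs this selection itself once it has been given the mapping, and its exact rule may differ from this one. The breakpoint values, the layout names, and the `pickLayout` function are all invented for illustration.

```typescript
interface Size {
  widthDp: number;
  heightDp: number;
}

interface Breakpoint extends Size {
  layout: string; // Hypothetical layout name based on grid size.
}

// Invented breakpoints; real values depend on the device and the design.
const breakpoints: Breakpoint[] = [
  { widthDp: 110, heightDp: 40, layout: "2x1" },
  { widthDp: 110, heightDp: 110, layout: "2x2" },
  { widthDp: 250, heightDp: 110, layout: "4x2" },
];

// Pick the largest layout that fits; fall back to the smallest one otherwise.
function pickLayout(available: Size, candidates: Breakpoint[]): string {
  if (candidates.length === 0) {
    throw new Error("At least one breakpoint is required");
  }
  const area = (size: Size): number => size.widthDp * size.heightDp;
  const byArea = candidates.slice().sort((a, b) => area(a) - area(b));
  const fitting = byArea.filter(
    (c) => c.widthDp <= available.widthDp && c.heightDp <= available.heightDp
  );
  return fitting.length > 0
    ? fitting[fitting.length - 1].layout
    : byArea[0].layout;
}
```

The mapping goes from size to layout. Going the other way, from a reported width and height back to a known layout, requires the reported values to line up with the breakpoints on every device, which is not guaranteed.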

When a widget is reconfigured or resized, the onAppWidgetOptionsChanged() method is called. This is where we’d want to capture our analytical data about what had changed. Unfortunately, this view mapping doesn’t exist here. We have access to width and height values measured in dp, which initially appeared to be useful. At first, we felt that we could interpret these measurements as something meaningful and map the values back to our layout sizes. After testing on a couple of devices, we realized that the approach was unreliable and would lead to a large volume of bad analytical data. Without confidently knowing what size we are coming from, or going to, we decided to omit this particular analytics event from Android. We hope to bring this to Google’s attention and get it included in a future release.

Shipping New Widgets

Already having a pair of existing widgets, we had to decide how to transition to the new widgets as they would be replacing the existing implementation.

We didn’t find much documentation around migrating widgets. The docs only provided a way to enhance your widget, which means adding the new features of Android 12 to something you already had. This wasn’t applicable to us since our existing widgets were so different from the ones we were building.

The major issue that we couldn’t get around was related to the sizing strategies of our existing and new widgets. The existing widgets used a fixed width and height so they’d always be square. Our new widgets take whatever space is available. There wasn’t a way to guarantee that the new widget would fit in the available space that the existing widget had occupied. If the existing widget was the same size as the new one, it would have been worth exploring further.

Our initial plan was to make one of our widgets transform into the new widget while removing the other one. Given the above, this strategy would not work.

The compromise we came to, so as to not completely remove all of a merchant’s widgets overnight, was to deprecate the old widgets at the same time we release the new one. To deprecate, we updated our old widget’s UI to display a message informing that the widget is no longer supported and the merchant must add the new ones.

There’s no way to add a new widget programmatically or to bring the merchant to the widget picker by tapping on the old widget. We added some communication to help ease the transition by updating our help center docs, including information around how to use widgets, pointing our old widgets to open the help center docs, and just giving lots of time before removing the deprecation message. In the end, it wasn’t the most ideal situation and we came away learning about the pitfalls within the two ecosystems.

What’s Next

As we continue to learn about how merchants use our new generation of widgets and Android 12 features, we’ll continue to home in on the best experience across both our platforms. This also opens the way for other teams at Shopify to build on what we’ve started and create more widgets to help merchants.

On the topic of mobile-only platforms, this leads us into getting up to speed on Wear OS. Our watchOS app is about to get a refresh with the addition of WidgetKit; it feels like a great time to finally give our Android merchants watch support too!

Matt Bowen is a mobile developer on the Core Admin Experience team. Located in West Warwick, RI. Personal hobbies include exercising and watching the Boston Celtics and New England Patriots.

James Lockhart is a Staff Developer based in Ottawa, Canada. Experiencing mobile development over the past 10+ years: from PhoneGap to native iOS/Android and now React Native. He is an avid baker and cook when not contributing to the Shopify admin app.

Cecilia Hunka is a developer on the Core Admin Experience team at Shopify. Based in Toronto, Canada, she loves live music and jigsaw puzzles.

Carlos Pereira is a Senior Developer based in Montreal, Canada, with more than a decade of experience building native iOS applications in Objective-C, Swift and now React Native. Currently contributing to the Shopify admin app.

Wherever you are, your next journey starts here! If building systems from the ground up to solve real-world problems interests you, our Engineering blog has stories about other challenges we have encountered. Intrigued? Visit our Engineering career page to find out about our open positions and learn about Digital by Design.

Lessons From Building iOS Widgets

By Carlos Pereira, James Lockhart, and Cecilia Hunka

When the iOS 14 beta was originally announced, we knew we needed to take advantage of the new widget features and get something to our merchants. The new widgets looked awesome and could really give merchants a way to see their shop’s data at a glance without needing to open our app.

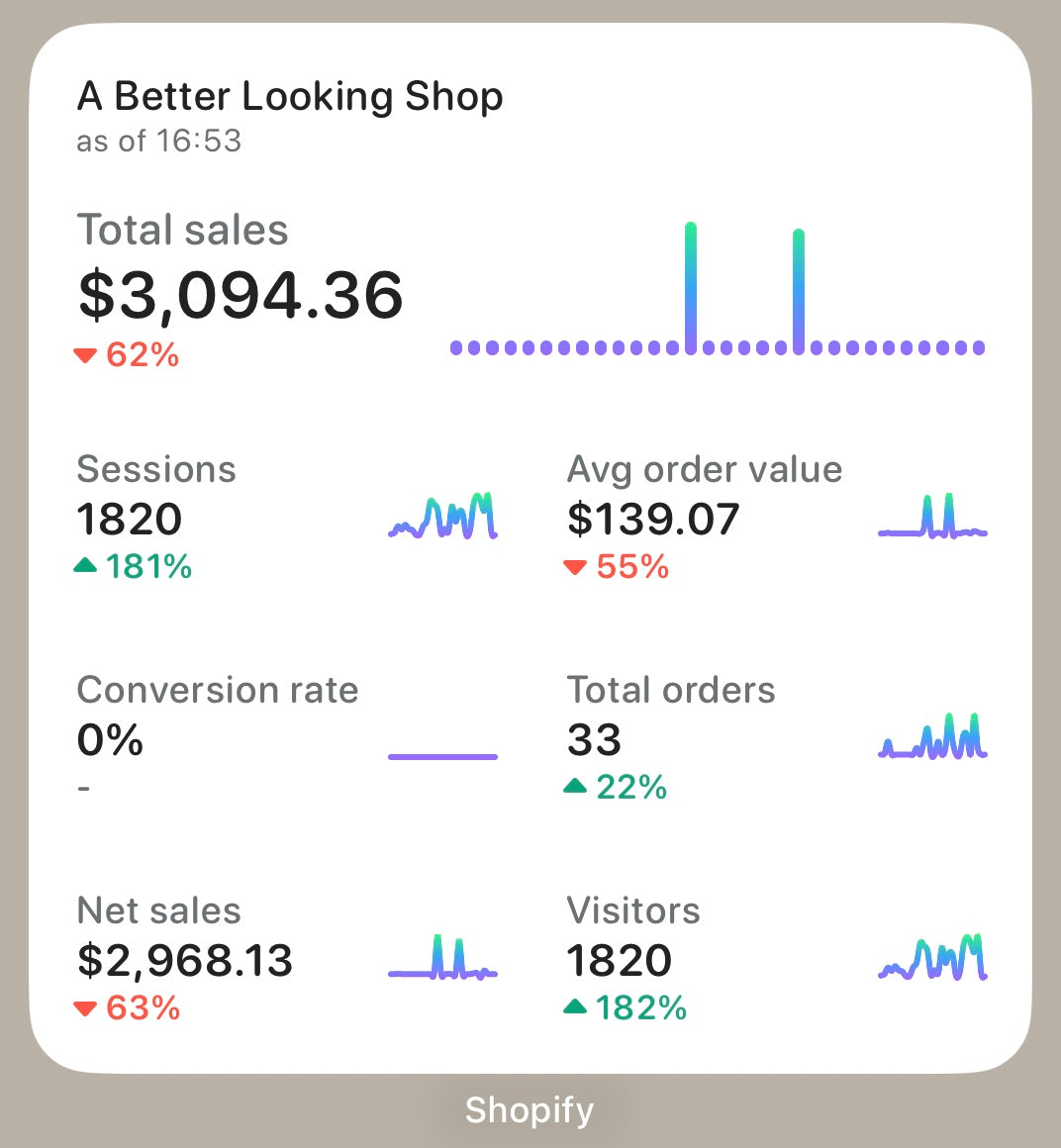

Fast forward a couple of years, and we now have lots of feedback on that design. We knew merchants were using the widgets, but they needed more. The current design was lacking: it only provided two metrics, and the widgets took up a lot of space. This experience prompted us to start a new project: upgrading our original design to better fit our merchants’ needs.


Why Widgets Are Important to Shopify

Our widgets mainly focus on analytics. Analytics can help merchants understand how they’re doing and gain insights to make better decisions quickly about their business. Monitoring metrics is a daily activity for a lot of our merchants, and on mobile, we have the opportunity to give merchants a faster way to access this data through widgets. Widgets provide “at a glance” information about a shop and give merchants a unique avenue to quickly get a pulse on their shops that they wouldn’t find on desktop.

After gathering feedback and continuously looking for opportunities to enhance our widget capabilities, we’re at our third iteration, and we’ll share with you how we approached building widgets and some of the challenges we faced.

Why We Didn’t Use React Native

A couple of years ago, Shopify decided to go all in on React Native. New development was done in React Native, and we began migrating some apps to the new stack, including our flagship admin app, where we were building our widgets. This posed the question: should we write the widgets in React Native?

After some investigation, we quickly hit roadblocks: app extensions are limited in terms of memory, WidgetKit’s architecture is highly optimized to work with SwiftUI because the view hierarchy is serialized to disk, and, at this time, there’s no official support in the React Native community for widgets.

Shopify believes in using the right tool for the job, and we believe that native development with SwiftUI was the best choice in this case.

Building the Widgets

When building out our architecture for widgets, we wanted to create a consistent experience on both iOS and Android while preserving platform idioms where it made sense. Below we’ll go over our experience and strategies building the widgets, pointing out some of the more difficult challenges we faced. Our aim is to shed some light around these less talked about surfaces, give some inspiration for your projects, and hopefully, save time when it comes to implementing your widgets.

Fetching Data

Some types of widgets have data that changes less frequently (for example, reminders) and some have data that can be forecasted for the entire day (for example, calendar and weather). In our case, merchants need up-to-date metrics about their business, so we need to show data that’s as fresh as possible. Timeliness is crucial for our widgets. Delays in data can cause confusion, or even worse, delay information that could inform a business decision. For example, let’s say you watch the Stocks app. You would expect the Stocks app and its corresponding widget data to be as up to date as possible. If the data is multiple hours stale, you could miss valuable information for making decisions, or you could miss an important drop or rise in price. With our product, our merchants need information that’s as up to date as we can provide to run their business.

Fetching Data in the App

Widgets can be kept up to date with relevant and timely information by using data available locally or fetching it from a server. The server fetching can be initiated by the widget itself or by the host app. In our case, since the app doesn’t share the same information as the widget, we decided it made more sense to fetch it from the widget.

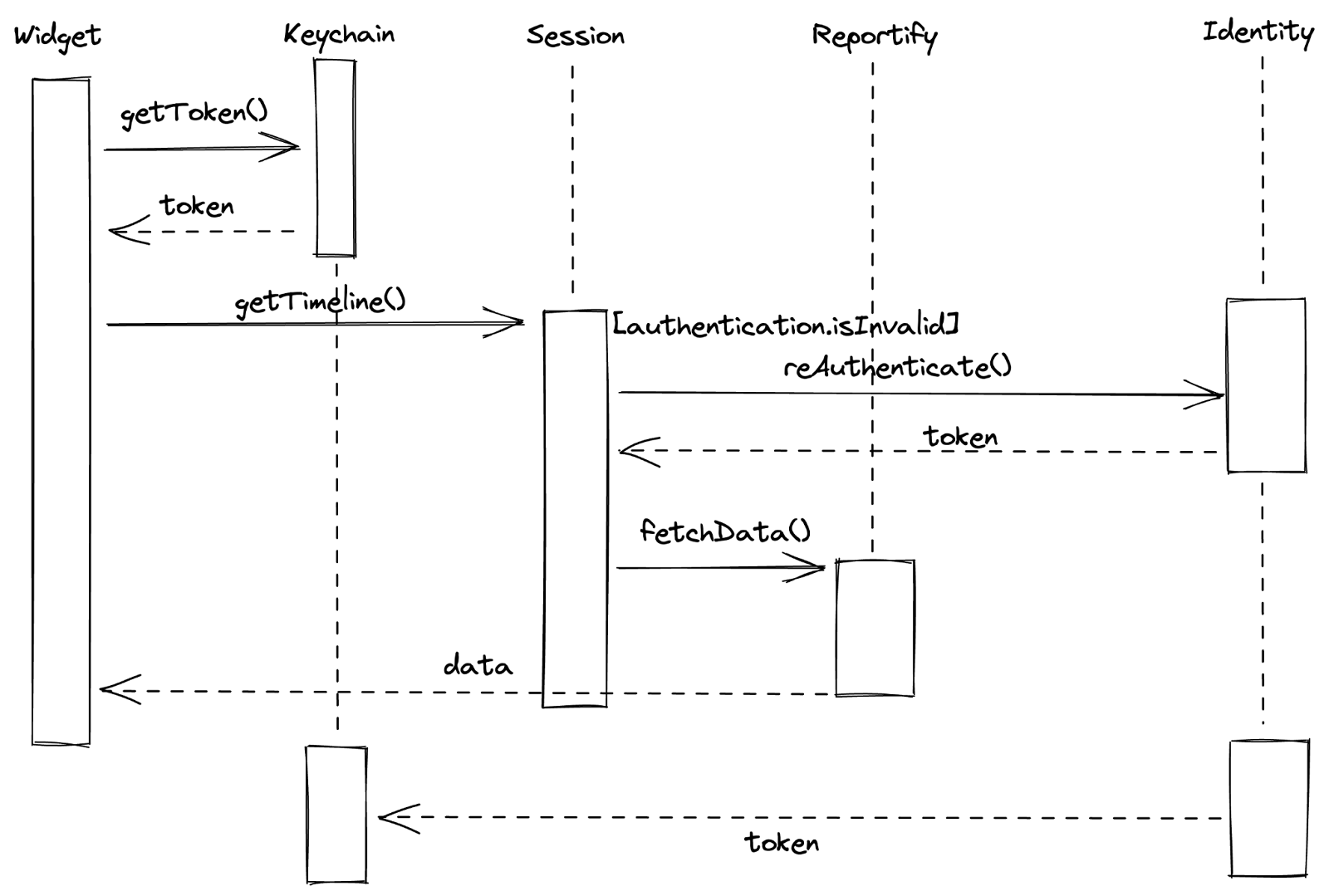

We’re still considering moving data fetching to the app once we start sharing similar data between widgets and the app. This architecture could simplify the handling of authentication, state management, updating data, and caching in our widget since only one process will have this job rather than two separate processes. It’s worth noting that the widget can access code from the main app, but they can only communicate data through the keychain and shared user defaults, as widgets run on separate processes. Sharing the data fetching, however, comes with the added complexity of having a background process pushing or making data available to the widgets, since widgets must remain functional even if the app isn’t in the foreground or background. For now, we’re happy with the current solution: the widgets fetch data independently from the app while sharing the session management code and tokens.

Querying Business Analytics Data with Reportify and ShopifyQL

The business data and visualizations displayed in the widgets are powered by Reportify, an in-house service that exposes data through a set of schemas queried via ShopifyQL, Shopify’s commerce data querying language. It looks very similar to SQL but is designed around data for commerce. For example, a single query can fetch a shop’s total sales for the day.

Making Our Widgets Antifragile

iOS Widgets' architecture is built in a way that updates are mindful of battery usage and are budgeted by the system. In the same way, our widgets must also be mindful of saving bandwidth when fetching data over a network. While developing our second iteration we came across a peculiar problem that was exacerbated by our specific use case.

Since we need data to be fresh, we always pull new data from our backend on every update. Updates are approximately 15 minutes apart to avoid having our widgets stop updating (Apple’s Developer site explains why). We found that iOS calls the update methods, getTimeline() and getSnapshot(), more than once in an update cycle. In widgets like calendar, these extra calls come without much extra cost as the data is stored locally. However, in our app, this was triggering two to five extra network calls for the same widget with the same data in quick succession.

We also noticed these calls were causing a seemingly unrelated kick out issue affecting the app. Each widget runs on a different process than the main application, and all widgets share the keychain. Once the app requests data from the API, it checks to see if it has an authenticated token in the keychain. If that token is stale, our system pauses updates, refreshes the token, and continues network requests. In the case of our widgets, each widget call to update was creating another workflow that could need a token refresh. When we only had a single widget or update flow, it worked great! Even four to five updates would usually work pretty well. However, eventually one of these network calls would come out of order and an invalid token would get saved. On our next update, we have no way to retrieve data or request a new token resulting in a session kick out. This was a great find as it was causing a lot of frustration for our affected merchants and ourselves, who could never really put our finger on why these things would, every now and again, just log us out.

In order to correct the unnecessary roundtrips, we built a simple short-lived cache:

- The system asks our widget to provide new data

- We first look into the local cache using a key specific to that widget. On iOS, our key is produced from the configuration for that widget, as there are no unique identifiers provided. The key also takes into account settings such as locale, so that a language change forces an update instead of returning cached data in the old language.

- If there’s data, and that data was set less than one minute ago, we return it and avoid making a network request.

- Otherwise, we fetch the data as normal and store it in the cache with the timestamp.

With this solution, we reduced unused network calls and system load, avoided collecting incorrect analytics, and fixed a long running bug with our app kick outs!
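The cache steps above can be sketched roughly as follows. This is a minimal in-memory illustration, not the code shipped in the app: the names `ShortLivedCache`, `CacheEntry`, and `cacheKey`, as well as the fields used to build the key, are assumptions made for the example.

```swift
import Foundation

/// A cached payload together with the time it was stored.
struct CacheEntry<Value> {
    let value: Value
    let storedAt: Date
}

/// Minimal in-memory cache whose entries expire after `timeToLive` seconds.
/// (Hypothetical type: a real widget extension would likely persist entries
/// somewhere that every getTimeline() and getSnapshot() call can reach.)
final class ShortLivedCache<Value> {
    private var entries: [String: CacheEntry<Value>] = [:]
    private let timeToLive: TimeInterval

    init(timeToLive: TimeInterval = 60) {
        self.timeToLive = timeToLive
    }

    /// Returns the value only if it was stored less than `timeToLive` seconds before `now`.
    func value(forKey key: String, now: Date = Date()) -> Value? {
        guard let entry = entries[key],
              now.timeIntervalSince(entry.storedAt) < timeToLive else {
            return nil
        }
        return entry.value
    }

    /// Stores the value along with the timestamp used for the freshness check.
    func store(_ value: Value, forKey key: String, now: Date = Date()) {
        entries[key] = CacheEntry(value: value, storedAt: now)
    }
}

/// Builds a key from the widget configuration, since no unique widget id is available.
/// Including the locale means a language change misses the cache and forces a fetch.
func cacheKey(shopID: String, metrics: [String], dateRange: String, locale: String) -> String {
    ([shopID] + metrics + [dateRange, locale]).joined(separator: "|")
}
```

Passing `now` explicitly keeps the expiry logic easy to unit test without waiting a full minute.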

Implementing Decoder Strategy with Dynamic Selections

When fetching the data from the Analytics REST service, each widget can be configured with two to seven metrics from a total of 12. This set should grow in the future as new metrics are available too! Our current set of metrics are all time-based and have a similar structure.

But that doesn’t mean the structure of future metrics will not change. For example, what about a metric that isn’t mapped over a time range, like orders to fulfill, which doesn’t contain any historical information?

The merchant is also able to configure the order the metrics appear, which shop (if they have more than one shop), and which date range represents the data: today, last 7 days, and last 30 days.

We had to implement a data fetching and decoding mechanism that:

- only fetches the data the merchant requested in order to avoid asking for unneeded information

- supports a set of metrics as well as being flexible to add future metrics with different shapes

- supports different date ranges for the data.

A simplified version of the solution works as follows. First, we create a struct to represent the query to the analytics service (Reportify).

Then, we create a class to represent the decodable response. Right now it has a fixed structure (value, comparison, and chart values), but in the future we can use an enum or different subclasses to decode different shapes.

Next, we create a response wrapper that attempts to decode the metrics based on a list of metric types passed to it. Each metric has its configuration, so we know which class is used to read the values.

Finally, when the widget Timeline Provider asks for new data, we fetch the data from the current metrics and decode the response.
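To make the decoding step more concrete, here is a small sketch of decoding only the requested metrics with runtime coding keys. It isn't the app's implementation: the JSON shape, the metric names, and the types `MetricType`, `TimeBasedMetric`, `DynamicKey`, and `MetricsResponse` are all assumptions, since Reportify's actual response format isn't shown here.

```swift
import Foundation

/// Hypothetical metric identifiers (the real app offers 12).
enum MetricType: String, CaseIterable {
    case totalSales = "total_sales"
    case orders = "orders"
}

/// The fixed shape described above: value, comparison, and chart values.
struct TimeBasedMetric: Decodable, Equatable {
    let value: Double
    let comparison: Double
    let chartValues: [Double]
}

/// A coding key that can be built from any string at runtime.
struct DynamicKey: CodingKey {
    let stringValue: String
    var intValue: Int? { nil }
    init?(stringValue: String) { self.stringValue = stringValue }
    init?(intValue: Int) { return nil }
}

/// Decodes only the requested metrics, skipping any that are missing
/// or that don't match the expected shape.
struct MetricsResponse {
    static let requestedKey = CodingUserInfoKey(rawValue: "requestedMetrics")!

    let metrics: [MetricType: TimeBasedMetric]

    init(data: Data, requested: [MetricType]) throws {
        let decoder = JSONDecoder()
        // Hand the list of requested metric types to the decoding initializer.
        decoder.userInfo[Self.requestedKey] = requested
        metrics = try decoder.decode(Payload.self, from: data).metrics
    }

    private struct Payload: Decodable {
        let metrics: [MetricType: TimeBasedMetric]

        init(from decoder: Decoder) throws {
            let requested = decoder.userInfo[MetricsResponse.requestedKey] as? [MetricType] ?? []
            let container = try decoder.container(keyedBy: DynamicKey.self)
            var decoded: [MetricType: TimeBasedMetric] = [:]
            for type in requested {
                guard let key = DynamicKey(stringValue: type.rawValue) else { continue }
                // A failed decode leaves the metric out instead of failing the whole response.
                decoded[type] = try? container.decode(TimeBasedMetric.self, forKey: key)
            }
            metrics = decoded
        }
    }
}
```

A metric with a different shape could later be supported by switching on the metric type inside the loop and decoding a different struct for it.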

Building the UI

We wanted to support the three widget sizes: small, medium, and large. From the start we wanted to have a single View to support all sizes as an attempt to minimize UI discrepancies and make the code easy to maintain.

We started by identifying the common structure and creating components. We ended up with a Metric Cell component that has three variations:

All three variations consist of a metric name and value, chart, and a comparison. As the widget containers become bigger, we show the merchant more data. Each view size contains more metrics, and the largest widget contains a full width chart on the first chosen metric. The comparison indicator also gets shifted from bottom to right on this variation.

The first chosen metric, on the large widget, is shown as a full width cell with a bar chart showing the data more clearly; we call it the Primary cell. We added a structure to indicate if a cell is going to be used as primary or not. Besides the primary flag, our component doesn’t have any context about the widget size, so we use chart data as an indicator to render a cell primary or not. This paradigm fits very well with SwiftUI.

A simplified version of the actual Cell View:

After building our cells, we need to create a structure to render them in a grid according to the size and metrics chosen by the merchant. This component also has no context of the widget size, so our layout decisions are mainly based on how many metrics we are receiving. In this example, we’ll refer to the View as a WidgetView.

The WidgetView is initialized with a WidgetState, a struct that holds most of the widget data such as shop information, the chosen metrics and their data, and a last updated string (which represents the last time the widget was updated).

To be able to make decisions on layout based on the widget characteristics, we created an OptionSet called LayoutOption. This is passed as an array to the WidgetView.

Layout options:

That helped us avoid tying this component to widget families. Instead, it depends on layout characteristics, which makes it very reusable in other contexts.
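As an illustration of the idea, an OptionSet of layout characteristics might look like the sketch below. The option names and the `columnCount(for:)` helper are hypothetical and chosen only for this example; they aren't the app's actual options.

```swift
/// Hypothetical layout flags; the names are illustrative only.
struct LayoutOption: OptionSet {
    let rawValue: Int

    static let showShopName = LayoutOption(rawValue: 1 << 0)
    static let showPrimaryCell = LayoutOption(rawValue: 1 << 1)
    static let twoColumns = LayoutOption(rawValue: 1 << 2)
}

/// Number of grid columns implied by the options.
func columnCount(for options: LayoutOption) -> Int {
    options.contains(.twoColumns) ? 2 : 1
}

// OptionSet supports array literal syntax, so a set of options reads like an array.
let largeWidgetOptions: LayoutOption = [.showShopName, .showPrimaryCell, .twoColumns]
let smallWidgetOptions: LayoutOption = []
```

Because the view only inspects options such as these, the same component can be laid out for a new container without knowing which widget family it is in.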

The WidgetView layout is built using mainly a LazyVGrid component:

A simplified version of the actual View:

Adding Dynamic Dates

One important piece of information on our widget is the last updated timestamp. It helps remove confusion by allowing merchants to quickly know how fresh the data they’re looking at is. Since iOS has an approximate update time with many variables, coupled with data connectivity, it’s very possible the data could be over 15 minutes old. If the data is quite stale (say you went to the cottage for the weekend and missed a few updates) and there was no update string, you would assume the data you’re looking at is up to the second. This can cause unnecessary confusion for our merchants. The solution here was to make sure we communicate to our merchant when the last update was.

In our previous design, we only had small widgets, and they were able to display only one metric. The timestamp resulted in a long string that, on smaller devices, would sometimes wrap and show over two lines. This was fine when space was abundant in our older design but not in our new data-rich designs. We explored how we could best work with timestamps on widgets, and the most promising solution was to use relative time. Instead of having a static value such as “as of 3:30pm” like our previous iteration, we would have a dynamic date that would look like: “1 min, 3 sec ago.”

One thing to remember is that even though the widget is visible, we have a limited number of updates we can trigger. Otherwise, it would be consuming a lot of unnecessary resources on the merchant’s device. We knew we couldn’t keep triggering updates on the widget as often as we wanted (nor would it be allowed), but iOS has ways to deal with this. Apple did release support for dynamic text on widgets during our development that allowed using timers on your widgets without requiring updates. We simply need to pass a style to a Text component and it automatically keeps everything up to date:

Text("\(now, style: .relative) ago")

It was good, but we have no options to customize the relative style. Being able to customize the relative style was an important point for us, as the current supported style does not fit well with our widget layout. One of our biggest constraints with widgets is space as we always need to think about the smallest widget possible. In the end we decided not to move forward with the relative time approach, and kept a reduced version of our previous timestamp.

Adding Configuration

Our new widgets have a great amount of configuration, allowing for merchants to choose exactly what they care about. For each widget size, the merchant can select the store, a certain number of metrics, and a date range. On iOS, widgets are configured through the SiriKit Intents API. We faced some challenges with the WidgetConfiguration, but fortunately, all had workarounds that fit our use cases.

It’s Not Possible to Deselect a Metric

When defining a field that has multiple values provided dynamically, we can limit the number of options per widget family. This was important for us, since each widget size has a different number of metrics it can support. However, the current UI on iOS for widget configuration only allows selecting a value but not deselecting it. So, once we selected a metric we couldn’t remove it, only update the selection. But what if the merchant were only interested in one metric on the small widget? We solved this with a small design change, by providing “None” as an option. If the merchant were to choose this option, it would be ignored and shown as an empty state.

It’s Not Possible to Validate the User Selections

With the addition of “None” and the way intents are designed, it was possible to select “None” for every option and have a widget with no metrics. In addition, it was possible to select the same metric twice. We would like to be able to validate the user selection, but the Intents API didn’t support it. The solution was to embrace the fact that a widget can be empty and show an empty state. Duplicates were filtered out: any repeated selection of a metric was changed to “None” before we sent any network requests.
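The duplicate filtering can be sketched as below. The selection model is an assumption for the example: metrics are plain strings and `nil` stands for the “None” option.

```swift
/// Replaces any repeated metric with nil ("None"), keeping the first occurrence in place.
/// Hypothetical model: `nil` represents the "None" option in the configuration.
func removingDuplicates(from selections: [String?]) -> [String?] {
    var seen = Set<String>()
    return selections.map { selection -> String? in
        guard let metric = selection else { return nil }
        // insert(_:) reports whether the metric was newly added to the set.
        return seen.insert(metric).inserted ? metric : nil
    }
}
```

Keeping the array the same length preserves the position of each remaining metric, so an empty slot renders as an empty state rather than shifting the other cells.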

The First Calls to getTimeline and getSnapshot Don’t Respect the Maximum Metric Count

For intent configurations provided dynamically, we must provide default values in the IntentHandler. In our case, the metrics list varies per widget family. In the IntentHandler, it’s not possible to query which widget family is being used. So we had to return at least as many metrics as the largest widget (seven).

However, even if we limit the number of metrics per family, the first getTimeline and getSnapshot calls in the Timeline Provider were filling the configuration object with all default metrics, so a small widget would have seven metrics instead of two!

We ended up adding some cleanup code in the beginning of the Timeline Provider methods that trims the list depending on the expected number of metrics.
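A minimal sketch of that cleanup step follows. The `Family` enum stands in for WidgetKit’s widget family so the example builds without the framework. The small (two) and large (seven) limits come from the text above; the medium limit is an assumption.

```swift
/// Stand-in for WidgetKit's widget family so the sketch builds without the framework.
enum Family {
    case small, medium, large
}

/// Maximum number of metrics per size.
/// Small and large follow the text; the medium value is an assumption.
func maximumMetricCount(for family: Family) -> Int {
    switch family {
    case .small: return 2
    case .medium: return 4
    case .large: return 7
    }
}

/// Drops any metrics beyond what the given family can display.
func trimmedMetrics(_ metrics: [String], for family: Family) -> [String] {
    Array(metrics.prefix(maximumMetricCount(for: family)))
}
```

Running this at the start of both getTimeline and getSnapshot would keep the over-filled default configuration from reaching the network layer or the views.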

Optimizing Testing

Automated tests are a fundamental part of Shopify’s development process. In the Shopify app, we have a good amount of unit and snapshot tests. The old widgets on Android had good test coverage already, and we built on the existing infrastructure. On iOS, however, there were no tests since it’s currently not possible to add test targets against a widget extension on Xcode.

Given this would be a complex project and we didn’t want to compromise on quality, we investigated possible solutions for it.

The simplest solution would be to add each file to both the app and the widget extension targets. Then we could unit test the code on the app side in our standard test target. We decided not to do this since we would always need to add a file to both targets, and it would bloat the Shopify app unnecessarily.

We chose to create a separate module (a framework in our case) and move all testable code there. Then we could create unit and snapshot tests for this module.

We ended up moving most of the code, like views and business logic, to this new module (WidgetCore), while the extension only had WidgetKit specific code and configuration like Timeline provider, widget bundle, and intent definition generated files.

Given our code in the Shopify app is based on UIKit, we did have to update our in-house snapshot testing framework to support SwiftUI views. We were very happy with the results. We ended up achieving a high test coverage, and the tests flagged many regressions during development.

Fast SwiftUI Previews

The Shopify app is a big application, and it takes a while to build. Given the widget extension is based on our main app target, it took a long time to prepare the SwiftUI previews. This caused frustration during development. It also removed one of the biggest benefits of SwiftUI—our ability to iterate quickly with Previews and the fast feedback cycle during UI development.

One idea we had was to create a module that didn’t rely on our main app target. We created one called WidgetCore where we put a lot of our reusable Views and business logic. It was fast to build and could also render SwiftUI previews. The one caveat is, since it wasn’t a widget extension target, we couldn’t leverage the WidgetPreviewContext API to render views on a device. It meant we needed to load up the extension to ensure the designs and changes were always working as expected on all sizes and modes (light and dark).

To solve this problem, we created a PreviewLayout extension. This had all the widget sizes based on the Apple documentation, and we were able to use it in a similar way:

Our PreviewLayout extension would be used on all of our widget related views in our WidgetCore module to emulate the sizes in previews:

Acquiring Analytics

Ever since our first widgets were developed, we’ve wanted to understand how merchants are using the functionality so we can keep improving it. The data team built some dashboards showing things like a detailed view of how many widgets are installed, the most popular metrics, and sizes.

Most of the data used to build the dashboards come from analytics events fired through the widgets and the Shopify app.

For the new widgets, we wanted to better understand adoption and retention of widgets, so we needed to capture how users are configuring their widgets over time and which ones are being added or removed.

Managing Unique Ids

WidgetKit has the WidgetCenter class that allows requesting information about the widgets currently configured on the device through the getCurrentConfigurations method. However, the list of metadata returned (WidgetInfo) doesn’t have a stable unique identifier. Its identifier is the object itself, since it’s hashable. Given this constraint, if two identical widgets are added, they’ll both have the same identifier. Also, given the intent configuration is part of the id, if something changes (for example, the date range) it’ll look like it’s a totally different widget.

Given this limitation, we had to adjust the way we calculate the number of unique widgets. It also made it harder to distinguish between different life-cycle events (adding, removing, and configuring). Hopefully there will be a way to get unique ids for widgets in future versions of iOS. For now we created a single value derived from the most important parts of the widget configuration.
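One way to derive such a value is sketched below. Which fields count as “the most important parts” isn’t specified here, so the fields in `WidgetFingerprint` are assumptions. Leaving out the date range is also an assumption, made to show how a date range change could avoid looking like a brand new widget.

```swift
/// Hypothetical subset of the widget configuration used for identification.
struct WidgetFingerprint: Hashable {
    let family: String
    let shopID: String
    let metrics: [String]

    /// Stable string form, suitable for persisting or attaching to analytics events.
    var identifier: String {
        ([family, shopID] + metrics).joined(separator: ":")
    }
}
```

Two identical widgets still produce the same identifier with this approach, so any counting built on top of it has to work with multiplicities rather than unique ids.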

Detecting, Adding, and Removing Widgets

Currently there’s no WidgetKit life cycle method that tells us when a widget was added, configured, or removed. We needed it so we can better understand how widgets are being used.

After some exploration, we noticed that the only methods we could count on were getTimeline and getSnapshot. We then decided to build something that could simulate these missing life cycle methods by using the ones we had available. getSnapshot is usually called on state transitions and also on the widget Gallery, so we discarded it as an option.

We built a solution that did the following:

- Every time the Timeline provider’s getTimeline is called, we call WidgetKit’s getCurrentConfigurations to see which widgets are currently installed.

- We then compare this list with a previous snapshot we persist on disk.

- Based on this comparison, we try to guess which widgets were added and removed.

- Then we trigger the proper life cycle methods: didAddWidgets(), didRemoveWidgets().

Due to identifiers not being stable, we couldn’t find a reliable approach to detect configuration changes, so we ended up not supporting it.
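The comparison step can be sketched as a diff between two lists of derived identifiers. This is an illustration under assumptions: identifiers are plain strings, and the function names `counts(of:)` and `diffWidgets(previous:current:)` are hypothetical. Because identical widgets share an identifier, the sketch compares counts per identifier instead of using a plain set difference.

```swift
/// Counts how many widgets share each derived identifier.
func counts(of identifiers: [String]) -> [String: Int] {
    identifiers.reduce(into: [:]) { result, id in result[id, default: 0] += 1 }
}

/// Compares the persisted snapshot with the current list and returns the
/// identifiers that appear to have been added or removed.
func diffWidgets(previous: [String], current: [String]) -> (added: [String], removed: [String]) {
    let before = counts(of: previous)
    let after = counts(of: current)
    var added: [String] = []
    var removed: [String] = []

    // Visit every identifier seen in either list, in a deterministic order.
    for id in Set(before.keys).union(after.keys).sorted() {
        let delta = after[id, default: 0] - before[id, default: 0]
        if delta > 0 {
            added.append(contentsOf: Array(repeating: id, count: delta))
        } else if delta < 0 {
            removed.append(contentsOf: Array(repeating: id, count: -delta))
        }
    }
    return (added, removed)
}
```

A configuration change shows up here as one removal plus one addition, which is why it can’t be told apart from a merchant removing one widget and adding another.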

We also noticed that the results of WidgetKit’s getCurrentConfigurations can have some delay. If we remove a widget, it may take a couple of getTimeline calls for it to be reflected. We adjusted our analytics scheme to take that into account.

Supporting iOS 16

Our approach to widgets made supporting iOS 16 out of the gate really simple with a few changes. Since our lock screen complications will surface the same information as our home screen widgets, we can actually reuse the Intent configuration, Timeline Provider, and most of the views! The only change we need to make is to adjust the supported families to include .accessoryInline, .accessoryCircular, and .accessoryRectangular, and, of course, draw those views.

Our Main View would also just need a slight adjustment to work with our existing home screen widgets.

Migrating Gracefully

WidgetKit was introduced for watchOS complications in watchOS 9, released alongside iOS 16. This update comes with a foreboding message from Apple:

Important

As soon as you offer a widget-based complication, the system stops calling ClockKit APIs. For example, it no longer calls your CLKComplicationDataSource object’s methods to request timeline entries. The system may still wake your data source for migration requests.

We really care about our apps at Shopify, so we needed to unpack what this meant and how it affects our merchants running older devices. After some testing on devices, we found that everything is fine.

If you’re currently running WidgetKit complications and add support for lock screen complications, your ClockKit app and complications will continue to function as you’d expect.

What we had assumed was that WidgetKit itself was taking the place of watchOS complications; however, to use WidgetKit on watchOS, you need to create a new target for the watch. This makes sense, although the APIs are so similar that we had assumed it was a one and done approach: one WidgetKit extension for both platforms.

One thing to watch out for: if you do implement the new WidgetKit on watchOS, your users on watchOS 9 and above will lose all of their complications from ClockKit. Apple did provide a migration API to support the change that’s called instead of your old complications.

From our testing, if you don’t have the luxury of just setting your target to iOS 16, your complications will continue to load for those on watchOS 8 and below.

Shipping New Widgets

We already had a set of widgets running on both platforms, so we had to decide how to transition to the new update, as the new widgets would be replacing the existing implementation. On iOS we had two different widget kinds, each with its own small widget (you can think of kinds as a widget group). With the new implementation, we wanted to provide a single widget kind that offered all three sizes. We didn’t find much documentation around the migration, so we simulated what happens to the widgets under different scenarios.

If the merchant has a widget on their home screen and the app updates, one of two things would happen:

- The widget would become a white blank square (the kind IDs matched).

- The widget just disappeared altogether (the kind ID was changed).

The initial plan (we had hoped for) was to make one of our widgets transform into the new widget while removing the other one. Given the above, this strategy wouldn’t work. This also includes some annoying tech debt since all of our Intent files would continue to mention the name of the old widget.

The compromise we came to, so as to not completely remove all of a merchant’s widgets overnight, was to deprecate the old widgets at the same time we release the new ones. To deprecate, we updated our old widget’s UI to display a message informing that the widget is no longer supported, and the merchant must add the new ones. The lesson here is you have to be careful when you make decisions around widget grouping as it’s not easy to change.

There’s no way to add a new widget programmatically or to bring the merchant to the widget gallery by tapping on the old widget. We also added some communication to help ease the transition by:

- updating our help center docs, including information around how to use widgets

- pointing our old widgets to open the help center docs

- giving lots of time before removing the deprecation message.

In the end, it wasn’t the most ideal situation, and we came away learning about the pitfalls within the two ecosystems. One piece of advice is to really reflect on current and future needs when defining which widgets to offer and how to split them, since a future modification may not be straightforward.

What’s Next

As we continue to learn about how merchants use our new generation of widgets, we’ll continue to home in on the best experience across both our platforms. Our widgets were made to be flexible, and we’ll be able to continually grow the list of metrics we offer through our customization. This work opens the way for other teams at Shopify to build on what we’ve started and create more widgets to help merchants too.

2022 is a busy year with iOS 16 coming out. We’ve got a new WidgetKit experience to integrate to our watch complications, lock screen complications, and live activities hopefully later this year!

Carlos Pereira is a Senior Developer based in Montreal, Canada, with more than a decade of experience building native iOS applications in Objective-C, Swift and now React Native. Currently contributing to the Shopify admin app.

James Lockhart is a Staff Developer based in Ottawa, Canada. Experiencing mobile development over the past 10+ years: from PhoneGap to native iOS/Android and now React Native. He is an avid baker and cook when not contributing to the Shopify admin app.

Cecilia Hunka is a developer on the Core Admin Experience team at Shopify. Based in Toronto, Canada, she loves live music and jigsaw puzzles.


Instant Performance Upgrade: From FlatList to FlashList

When was the last time you scrolled through a list? Odds are it was today, maybe even seconds ago. Iterating over a list of items is a feature common to many frameworks, and React Native’s FlatList is no different. The FlatList component renders items in a scrollable view without you having to worry about memory consumption and layout management (sort of, we’ll explain later).

The challenge, as many developers can attest to, is getting FlatList to perform on a range of devices without display artifacts like drops in UI frames per second (FPS) and blank items while scrolling fast.

Our React Native Foundations team solved this challenge by creating FlashList, a fast and performant list component that can be swapped into your project with minimal effort. The requirements for FlashList included:

- High frame rate: even when using low-end devices, we want to guarantee the user can scroll smoothly, at a consistent 60 FPS or greater.

- No more blank cells: minimize the display of empty items caused by code not being able to render them fast enough as the user scrolls (and causing them to wonder what’s up with their app).

- Developer friendly: we wanted FlashList to be a drop-in replacement for FlatList and also include helpful features beyond what the current alternatives offer.

The FlashList API is five stars. I've been recommending all my friends try FlashList once we open source.

FlatList vs FlashList. This is from the exact same sample. Almost 7.5x better JS FPS!

— Talha Naqvi (@naqvitalha) July 13, 2022

When items are complex or there are more to update per render, the differences become a lot more obvious.

This is on Moto G, scroll speed is as fast as possible. pic.twitter.com/eT7LGOjHGE

Here’s how we approached the FlashList project and its benefits to you.

The Hit List: Why the World Needs Faster Lists

Evolving FlatList was the perfect match between our mission to continuously create powerful components shared across Shopify and solving a difficult technical challenge for React Native developers everywhere. As more apps move from native to React Native, how could we deliver performance that met the needs of today’s data-hungry users while keeping lists simple and easy to use for developers?

Lists are in heavy use across Shopify, in everything from Shop to POS. For Shopify Mobile in particular, where over 90% of the app uses native lists, there was growing demand for a more performant alternative to FlatList as we moved more of our work to React Native.

What About RecyclerListView?

RecyclerListView is a third-party package optimized for rendering large amounts of data with very good real-world performance. The difficulty lies in using it: developers must spend a lot of time understanding how it works and experimenting with it, and it requires a significant amount of code to manage. For example, RecyclerListView needs predicted size estimates for each item, and if they aren’t accurate, the UI performance suffers. It also renders the entire layout in JavaScript, which can lead to visible glitches.

When done right, RecyclerListView works very well! But it’s just too difficult to use most of the time. It’s even harder if you have items with dynamic heights that can’t be measured in advance.

So, we decided to build upon RecyclerListView and solve these problems to give the world a new list library that achieved native-like performance and was easy to use.

The Bucket List: Our Approach to Improving React Native Lists

Our React Native Foundations team takes a structured approach to solving specific technical challenges and creates components for sharing across Shopify apps. Once we’ve identified an ambitious problem, we develop a practical implementation plan that includes rigorous development and testing, and an assessment of whether something should be open sourced or not.

Getting list performance right is a popular topic in the React Native community, and we’d heard about performance degradations when porting apps from native to React Native within Shopify. This was the perfect opportunity for us to create something better. We kicked off the FlashList project to build a better list component that had a similar API to FlatList and boosted performance for all developers. We also heard some skepticism about its value, as some developers rarely saw issues with their lists on newer iOS devices.

The answer here was simple. Our apps are used on a wide range of devices, so developing a performant solution across devices based on a component that was already there made perfect sense.

“We went with a metrics-based approach for FlashList rather than subjective, perception-based feelings. This meant measuring and improving hard performance numbers like blank cells and FPS to ensure the component worked on low-end devices first, with high-end devices the natural byproduct.” - David Cortés, React Native Foundations team

Just replaced Flatlist for Flashlist and wow, that thing is really fast @ShopifyDevs well done 🎉🥳👏🎊

— João Amadeu (@jmamadeu) July 4, 2022

Improved Performance and Memory Utilization

Our goal was to beat the performance of FlatList by orders of magnitude, measured by UI thread FPS and JavaScript FPS. With FlatList, developers typically see frame drops, even with simple lists. With FlashList, we improved upon the FlatList approach of generating new views from scratch on every update by using an existing, already allocated view and refreshing elements within it (that is, recycling cells). We also moved some of the layout operations to native, helping smooth over some of the UI glitches seen when RecyclerListView is provided with incorrect item sizes.

This streamlined approach boosted performance to 60 FPS or greater—even on low-end devices!
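To make the recycling idea concrete, here is a minimal sketch in TypeScript. It is not FlashList's actual implementation: `Cell` and `RecyclePool` are hypothetical names, and a plain object stands in for an allocated native view.

```typescript
// Hypothetical stand-in for an already allocated view.
interface Cell {
  id: number;      // identity of the allocated view
  content: string; // what the view currently displays
}

class RecyclePool {
  private free: Cell[] = [];
  private nextId = 0;
  allocations = 0; // how many cells were created from scratch

  // Reuse a released cell when one exists; allocate only as a fallback.
  acquire(content: string): Cell {
    const cell = this.free.pop();
    if (cell) {
      cell.content = content; // refresh the existing view in place
      return cell;
    }
    this.allocations += 1;
    return { id: this.nextId++, content };
  }

  // Called when a cell scrolls out of the viewport.
  release(cell: Cell): void {
    this.free.push(cell);
  }
}

const pool = new RecyclePool();
const first = pool.acquire("Row 0");
pool.release(first);                   // "Row 0" scrolled off screen
const second = pool.acquire("Row 10"); // reuses the same cell
```

Here the second `acquire` call hands back the cell that was just released, so only one allocation happens for two rows.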

Simple list comparison (Twitter clone) @ShopifyEng FlashList vs @reactnative FlatList

— Alexandre Moureaux (@almouro) July 1, 2022

on a low end Android

On the left, trying my best to scroll the FlatList 😅: the scroll locks because JS is dead! ☠️

Right side: no locking! ✅

Will post #performance metrics next week 📈 pic.twitter.com/IW8Ly5c1jD

We applied a similar strategy to improve memory utilization. Say you have a Twitter feed with 200-300 tweets per page. FlatList starts rendering with a large number of items to ensure they’re available as the user scrolls up or down. FlashList, in comparison, requires a much smaller buffer, which reduces memory footprint, improves load times, and keeps the app significantly more responsive. The default buffer is just 250 pixels.
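To see why a small buffer keeps few items mounted, the sketch below computes which rows fall inside the viewport plus a 250-pixel buffer. It assumes fixed-height rows and is only an illustration, not how FlashList computes its window internally; `renderWindow` is a hypothetical helper.

```typescript
// Returns the inclusive range of row indices to keep rendered.
// Assumes a vertical list where every row has the same height.
function renderWindow(
  scrollOffset: number,   // pixels scrolled from the top
  viewportHeight: number, // visible height in pixels
  itemHeight: number,     // fixed height of each row in pixels
  itemCount: number,      // total number of rows in the list
  bufferPx = 250          // extra pixels rendered above and below
): { first: number; last: number } {
  const top = Math.max(0, scrollOffset - bufferPx);
  const bottom = scrollOffset + viewportHeight + bufferPx;
  const first = Math.floor(top / itemHeight);
  const last = Math.min(itemCount - 1, Math.ceil(bottom / itemHeight) - 1);
  return { first, last };
}

// 300 rows of 100 pixels, an 800-pixel viewport, scrolled 1000 pixels down.
const range = renderWindow(1000, 800, 100, 300);
```

With these numbers the call returns rows 7 through 20, so 14 of the 300 rows are mounted at that scroll position.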

Both these techniques, along with other optimizations, help FlashList achieve its goal of no more blank cells, as the improved render times can keep up with user scrolling on a broader range of devices.

Shopify Mobile is using FlashList as the default and the Shop team moved search to FlashList. Multiple teams have seen major improvements and are confident in moving the rest of their screens.

These performance improvements included extensive testing and benchmarking on Android devices to ensure we met the needs of a range of capabilities. A developer may not see blank items or choppy lists on the latest iPhone but that doesn’t mean it’ll work the same on a lower-end device. Ensuring FlashList was tested and working correctly on more cost-effective devices made sure that it would work on the higher-end ones too.

Developer Friendly

If you know how FlatList works, you know how FlashList works. It’s easy to learn, as FlashList uses almost the same API as FlatList, and has new features designed to eliminate any worries about layout and performance, so you can focus on your app’s value.

Shotgun's main feature is its feed, so ensuring consistent and high-quality performance has always been crucial. That's why using FlashList was a no brainer. I love that compared to the classic FlatList, you can scrollToIndex to an index that is not within your view. This allowed us to quickly develop our new event calendar list, where users can jump between dates to see when and where there are events.

The fact that FlashList's API is exactly the same as FlatList is an application that will make it very easy to move your project to FlashList. In addition, they have added a few features, for example: You can see how long it takes to load the list thanks to the onLoad feature. pic.twitter.com/Izkwyte2Xj

— V. Furkan Güner (@vfurkanguner) July 4, 2022

It takes seconds to swap your existing list implementation from FlatList to FlashList. All you need to do is change the component name and optionally add the estimatedItemSize prop, as you can see in this example from our documentation:
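A minimal sketch of such a swap, assuming the `@shopify/flash-list` package is installed. The `DATA` array and the `MyList` component are placeholder names, and 200 is an arbitrary size estimate in pixels.

```tsx
import React from "react";
import { Text } from "react-native";
// Before the swap: import { FlatList } from "react-native";
import { FlashList } from "@shopify/flash-list";

// Placeholder data for illustration only.
const DATA = [{ title: "First Item" }, { title: "Second Item" }];

export const MyList = () => (
  <FlashList
    data={DATA}
    renderItem={({ item }) => <Text>{item.title}</Text>}
    // Optional hint: approximate item height in pixels (arbitrary value here).
    estimatedItemSize={200}
  />
);
```

The `data` and `renderItem` props are the same ones FlatList takes, so in this sketch only the import and the component name differ from the FlatList version.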

Powerful Features

Going beyond the standard FlatList props, we added new features based on common scenarios and developer feedback. Here are three examples:

- getItemType: improves render performance for lists that have different types of items, like text vs. image, by leveraging separate recycling pools based on type.

- Flexible column spans: developers can create grid layouts where each item’s column span can be set explicitly or with a size estimate (using overrideItemLayout), providing flexibility in how the list appears.

- Performance metrics: as we moved many layout functions to native, FlashList can extract key metrics for developers to measure and troubleshoot performance, like reporting on blank items and FPS. This guide provides more details.
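As a rough illustration of the first two features, the callbacks below could be passed as the `getItemType` and `overrideItemLayout` props of a multi-column list. The `FeedItem` type and the rule that featured images span two columns are assumptions made up for this sketch; only the callback shapes follow the FlashList documentation.

```typescript
// Hypothetical feed item; FlashList itself does not define this type.
type FeedItem =
  | { kind: "text"; body: string }
  | { kind: "image"; uri: string; featured: boolean };

// Items that return the same value share a recycling pool.
const getItemType = (item: FeedItem): string => item.kind;

// The callback mutates `layout` instead of returning a value.
const overrideItemLayout = (
  layout: { span?: number; size?: number },
  item: FeedItem
): void => {
  // Assumption for this sketch: featured images span two columns.
  layout.span = item.kind === "image" && item.featured ? 2 : 1;
};

const layout: { span?: number; size?: number } = {};
overrideItemLayout(layout, { kind: "image", uri: "a.png", featured: true });
```

Keeping both callbacks as plain functions outside the component means they are not recreated on every render and can be unit tested on their own.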

Documentation and Samples

We provide a number of resources to help you get started with FlashList:

- FlashList developer portal

- FlashList documentation

- Example app using FlashList

- How to use estimatedItemSize

- How to write performant components

Flash Forward with FlashList

Accelerate your React Native list performance by installing FlashList now. It’s already deployed within Shopify Mobile, Shop, and POS and we’re working on known issues and improvements. We recommend starring the FlashList repo and can’t wait to hear your feedback!

Want to read more about the process of launching this open source project? Check out our follow-up post, What We Learned from Open-Sourcing FlashList.


React Native Skia—For Us, For You, and For Fun

Right now, you are likely reading this content on a Skia surface. It powers Chrome, Android, Flutter, and others. Skia is a cross-platform drawing library that provides a set of drawing primitives that you can trust to run anywhere: iOS, Android, macOS, Windows, Linux, the browser, and now React Native.

Our goal with this project is twofold. First, we want to provide React Native, which is notorious for its limited graphical capabilities, with a set of powerful 2D drawing primitives that are consistent across iOS, Android, and the Web. Second, we want to bridge the gap between graphic designers and React Native: by providing the same UI capabilities as a tool like Figma, everyone can now speak the same language.

To bring the Skia library to React Native, we needed to rely on the JavaScript Interface (JSI), part of the new React Native architecture. This new API enables direct communication between JavaScript and native modules using C++ instead of asynchronous messages between the two worlds. JSI allows us to expose the Skia API directly in the following way:
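A minimal sketch of the imperative style, assuming the `@shopify/react-native-skia` package. The `drawCircles` helper is a hypothetical name, and how the canvas is obtained (for example from a drawing callback) depends on the library version, so it is left out here.

```typescript
import { Skia, BlendMode } from "@shopify/react-native-skia";
import type { SkCanvas } from "@shopify/react-native-skia";

// Paints are created and configured imperatively.
const paint = Skia.Paint();
paint.setAntiAlias(true);
paint.setBlendMode(BlendMode.Multiply);

// Hypothetical helper: draws three overlapping circles onto a Skia canvas
// that is `size` pixels wide and tall.
export const drawCircles = (canvas: SkCanvas, size: number): void => {
  const r = size / 3;

  const cyan = paint.copy();
  cyan.setColor(Skia.Color("cyan"));
  canvas.drawCircle(r, r, r, cyan);

  const magenta = paint.copy();
  magenta.setColor(Skia.Color("magenta"));
  canvas.drawCircle(size - r, r, r, magenta);

  const yellow = paint.copy();
  yellow.setColor(Skia.Color("yellow"));
  canvas.drawCircle(size / 2, size - r, r, yellow);
};
```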

We are making this API virtually 100% compatible with the Flutter API allowing us to do two things:

- Leverage the completeness and conciseness of their drawing API

- Eventually provide react-native-web support for Skia using CanvasKit, the Skia WebAssembly build used by Flutter for its web apps.

React is all about declarative UIs, so we are also providing a declarative API built on top of the imperative one. The example above can also be written as:
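A sketch of the declarative style, assuming the `Canvas`, `Group`, and `Circle` components from `@shopify/react-native-skia`. It draws three overlapping circles blended with the multiply mode; the `HelloWorld` name and the sizes are arbitrary.

```tsx
import React from "react";
import { Canvas, Circle, Group } from "@shopify/react-native-skia";

export const HelloWorld = () => {
  const size = 256;
  const r = size / 3;
  return (
    <Canvas style={{ width: size, height: size }}>
      {/* The blend mode set on the group applies to every circle inside it. */}
      <Group blendMode="multiply">
        <Circle cx={r} cy={r} r={r} color="cyan" />
        <Circle cx={size - r} cy={r} r={r} color="magenta" />
        <Circle cx={size / 2} cy={size - r} r={r} color="yellow" />
      </Group>
    </Canvas>
  );
};
```

Because the drawing is a component tree, the paint setup becomes props on `Group` and `Circle` rather than a sequence of calls on a paint object.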

This API allows us to provide an impressive level of composability to express complex drawings, and it allows us to perform declarative optimizations. We leverage the React Reconciler to perform the work of diffing the internal representation states, and we pass the differences through to the Skia engine.

React Native Skia offers a wide range of APIs such as advanced image filters, shaders, SVG, path operations, vertices, and text layouts. The demo below showcases a couple of drawing primitives previously unavailable in the React Native ecosystem. Each button contains a drop and inner shadow, the progress bar is rendered with an angular gradient, and the bottom sheet uses a backdrop filter to blur the content below it.

Gang, please use those shadows responsibly 😉 pic.twitter.com/3TlIXbiMBc

— William Candillon (@wcandillon) March 21, 2022

Below is an example of mesh gradients done using React Native Skia:

In last week's tutorial, we looked at two ways of building mesh gradients with React Native Skia. The first one using vertices. pic.twitter.com/tBt2O3lkT3

— William Candillon (@wcandillon) April 7, 2022

Reanimated 2 (a project also supported by Shopify) brought to life the vision of React Native developers writing animations directly in JavaScript code by running it on a dedicated thread. Animations in React Native Skia work the same way. Below is an example of animation in Skia:

If your drawing animation depends on an outside view, like a React Native gesture handler, for instance, we also provide a direct connector to Reanimated 2.