Data Science & Engineering

At Shopify, we’re empowering our platform to make great decisions quickly. Learn how we’re building better, smarter, and faster products through Data Engineering.

Monte Carlo Simulations: Separating Signal from Noise in Sampled Success Metrics

Usually, when you set success metrics, you’re able to directly measure the value of interest in its entirety. For example, Shopify can measure Gross Merchandise Volume (GMV) with precision because we can query our databases for every order we process. However, sometimes the information that tells you whether you’re having an impact isn’t available, or is too expensive or time-consuming to collect. In these cases, you’ll need to rely on a sampled success metric.

In a one-shot experiment, you can estimate the sample size you’ll need to achieve a given confidence interval. However, success metrics are generally tracked over time, and you’ll want to evaluate each data point in the context of the trend, not in isolation. Our confidence in our impact on the metric is cumulative. So, how do you extract the success signal from sampling noise? That’s where a Monte Carlo simulation comes in.

A Monte Carlo simulation can be used to understand the variability of outcomes in response to variable inputs. Below, we’ll detail how to use a Monte Carlo simulation to identify the data points you need for a trusted sampled success metric. We’ll walk through an example and share how to implement this in Python and pandas so you can do it yourself.

What is a Monte Carlo Simulation?

A Monte Carlo simulation generates a large number of random inputs based on real-world assumptions, feeds those inputs through a function that approximates the real-world situation of interest, and then observes the attributes of the output to understand the likelihood of possible outcomes under reasonable scenarios.

In the context of a sampled success metric, you can use the simulation to understand the tradeoff between:

- Your sample size

- Your ability to extract trends in the underlying population metric from random noise
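To make that tradeoff concrete, here is a minimal Monte Carlo sketch in Python with NumPy. It assumes the metric is Poisson-distributed with a true mean of 10 and uses a hypothetical tolerance of 5 percent; for each sample size, it estimates how often a sample's mean lands within that tolerance of the true mean. The function name and parameter values are illustrative assumptions.

```python
import numpy as np


def share_within_tolerance(sample_size, lam=10.0, tolerance=0.05, trials=2_000, seed=0):
    """Estimate how often a sample mean lands within `tolerance` (relative) of the true mean.

    Assumes issue counts are Poisson(lam); lam and tolerance are illustrative values.
    """
    rng = np.random.default_rng(seed)
    # Each row is one simulated sample of `sample_size` issue counts.
    samples = rng.poisson(lam, size=(trials, sample_size))
    relative_error = np.abs(samples.mean(axis=1) - lam) / lam
    return float(np.mean(relative_error <= tolerance))


for n in (25, 100, 400):
    print(f"sample size {n}: {share_within_tolerance(n):.0%} of samples within 5% of the true mean")
```

Larger samples land near the true mean more often, but the standard error only shrinks with the square root of the sample size, so each extra increment of certainty costs proportionally more data.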

These results can then be used to explain complex statistical concepts to your non-technical stakeholders. How? You’ll be able to simply explain the level of certainty a given sample size yields, weighed against the cost of collecting more data.

Using a Monte Carlo Simulation to Estimate Metric Variability

To show you how to use a Monte Carlo simulation for a sampled success metric, we'll turn to the Shopify App Store as an example. The Shopify App Store is a marketplace where our merchants can find apps and plugins to customize their store. We have over 8,000 apps solving a range of problems. We set a high standard for app quality, with over 200 minimum requirements focused on security, functionality, and ease of use. Each app needs to meet these requirements in order to be listed, and we have various manual and automated app review processes to ensure these requirements are met.

We want to continuously evaluate how our review processes are improving the quality of our app store. At the highest level, the question we want to answer is, “How good are our apps?” This can be represented quantitatively as, “How many requirements does the average app violate?” With thousands of apps in our app store, we can’t check every app, every day. But we can extrapolate from a sample.

By auditing randomly sampled apps each month, we can estimate a metric that tells us how many requirement violations merchants experience with the average installed app—we call this metric the shop issue rate. We can then measure against this metric each month to see whether our various app review processes are having an impact on improving the quality of our apps. This is our sampled success metric.

With mock data and parameters, we’ll show you how we can use a Monte Carlo simulation to identify how many apps we need to audit each month to have confidence in our sampled success metric. We'll then repeatedly simulate auditing randomly selected apps, varying the following parameters:

- Sample size

- Underlying trend in issue rate

To understand the sensitivity of our success metric to relevant parameters, we’ll work through five steps:

- Establish our simulation metrics

- Define the distribution we’re going to draw our issue count from

- Run a simulation for a single set of parameters

- Run multiple simulations for a single set of parameters

- Run multiple simulations across multiple parameters

To use a Monte Carlo simulation, you'll need to have a success metric in mind already. While it’s ideal if you have some idea of its current value and the distribution it’s drawn from, the whole point of the method is to see what range of outcomes emerges from different plausible scenarios. So, don’t worry if you don’t have any initial samples to start with.

Step 1: Establishing Our Simulation Metrics

We start by establishing simulation metrics. These are different from our success metric as they describe the variability of our sampled success metric. Metrics on metrics!

For our example, we’ll want to check this metric on a monthly basis to understand whether our approach is working. So, to establish our simulation metric, we ask ourselves, “Assuming we decrease our shop issue rate in the population by a given amount per month, in what percentage of months would our sampled metric decrease?” Let’s call this bespoke metric 1 month decreases observed, or 1mDO.

We can also ask this question over longer time periods, like two consecutive months (2mDO) or a full quarter (1qDO). As we make plans on an annual basis, we’ll want to simulate these metrics for one year into the future.

On top of our simulation metric, we’ll also want to measure the mean absolute percentage error (MAPE). MAPE tells us the average percentage by which the sampled shop issue rate departs from the true population rate each month.
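A minimal sketch of these simulation metrics in Python, assuming the sampled and true monthly values are available as equal-length sequences. The helper names are hypothetical, and treating 2mDO and 1qDO as comparisons against the value two or three months earlier is one possible reading of the definitions above, not necessarily the original implementation.

```python
import numpy as np


def mape(sampled, true):
    """Mean absolute percentage error of the sampled metric against the true population value."""
    sampled = np.asarray(sampled, dtype=float)
    true = np.asarray(true, dtype=float)
    return float(np.mean(np.abs(sampled - true) / true))


def share_of_decreases(sampled, window=1):
    """Share of months in which the sampled metric is lower than `window` months earlier.

    window=1 gives 1mDO; window=2 and window=3 are assumed readings of 2mDO and 1qDO.
    """
    sampled = np.asarray(sampled, dtype=float)
    changes = sampled[window:] - sampled[:-window]
    return float(np.mean(changes < 0))


sampled = [10.0, 9.0, 9.5, 8.0]  # hypothetical sampled shop issue rates
true = [10.0, 9.5, 9.0, 8.5]     # hypothetical population values
print(mape(sampled, true))                    # average relative deviation from the truth
print(share_of_decreases(sampled, window=1))  # 2 of 3 month-over-month changes are decreases
print(share_of_decreases(sampled, window=2))  # both two-month changes are decreases
```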

Now, with our simulation metrics established, we need to define what distribution we're going to be pulling from.

Step 2: Defining Our Sampling Distribution

For the purpose of our example, let’s say we’re going to generate a year’s worth of random app audits, assuming a given monthly decrease in the population shop issue rate (our success metric). We’ll want to compare the sampled shop issue rate that our Monte Carlo simulation generates to that of the population that generated it.

We generate our Monte Carlo inputs by drawing from a random distribution. For our example, we’ve identified that the number of issues an app has is well represented by the Poisson distribution, which approximates the number of positive outcomes across many independent Bernoulli trials, each with a small probability of a positive outcome (here, the evaluation of each requirement can be considered an individual trial, and a violation is a positive outcome). However, your measure of interest might match another distribution, like the normal distribution. You can find more information about fitting the right distribution to your data here.

The Poisson distribution has only one parameter, λ (lambda), which ends up being both the mean and the variance of the population. For a normal distribution, you’ll need to specify both the population mean and the variance.
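A quick way to sanity-check this property, sketched with NumPy's random `Generator`: draw many Poisson values with an assumed λ of 10 and compare the sample mean and variance. The normal draw alongside it shows the extra parameter you'd need; its values are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(42)

lam = 10.0  # assumed mean issue count per app
issue_counts = rng.poisson(lam, size=100_000)
# For a Poisson distribution, the mean and variance should both be close to lam.
print(issue_counts.mean(), issue_counts.var())

# A normal distribution needs its center and spread set separately
# (NumPy takes the standard deviation, i.e. the square root of the variance).
normal_draws = rng.normal(loc=10.0, scale=2.0, size=100_000)
print(normal_draws.mean(), normal_draws.var())
```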

Hopefully you already have some sample data you can use to estimate these parameters. If not, the code we’ll work through below will allow you to test what happens under different assumptions.

Step 3: Running Our Simulation with One Set of Parameter Values

Remember, the goal is to quantify how much the sample mean will differ from the underlying population mean given a set of realistic assumptions, using your bespoke simulation metrics.

We know that one of the parameters we need to set is Poisson’s λ. We also assume that we’re going to have a real impact on our metric every month. We’ll want to specify this as a percentage by which we’re going to decrease the λ (or mean issue count) each month.

Finally, we need to set how many random audits we’re going to conduct (aka our sample size). As the sample size goes up, so does the cost of collection. This is a really important number for stakeholders. We can use our results to help communicate the tradeoff between certainty of the metric versus the cost of collecting the data.

Now, we’re going to write the building block function that generates a realistic sampled time series given some assumptions about the parameters of the distribution of app issues. For example, we might start with the following assumptions:

- Our population mean is 10 issues per install. This is our λ parameter.

- Our shop issue rate decreases 5 percent per month. This is how much of an impact we expect our app review processes to have.

Note that these assumptions could be wrong, but the goal is not to get your assumptions right. We’re going to try lots of combinations of assumptions in order to understand how our simulation metrics respond across reasonable ranges of input parameters.

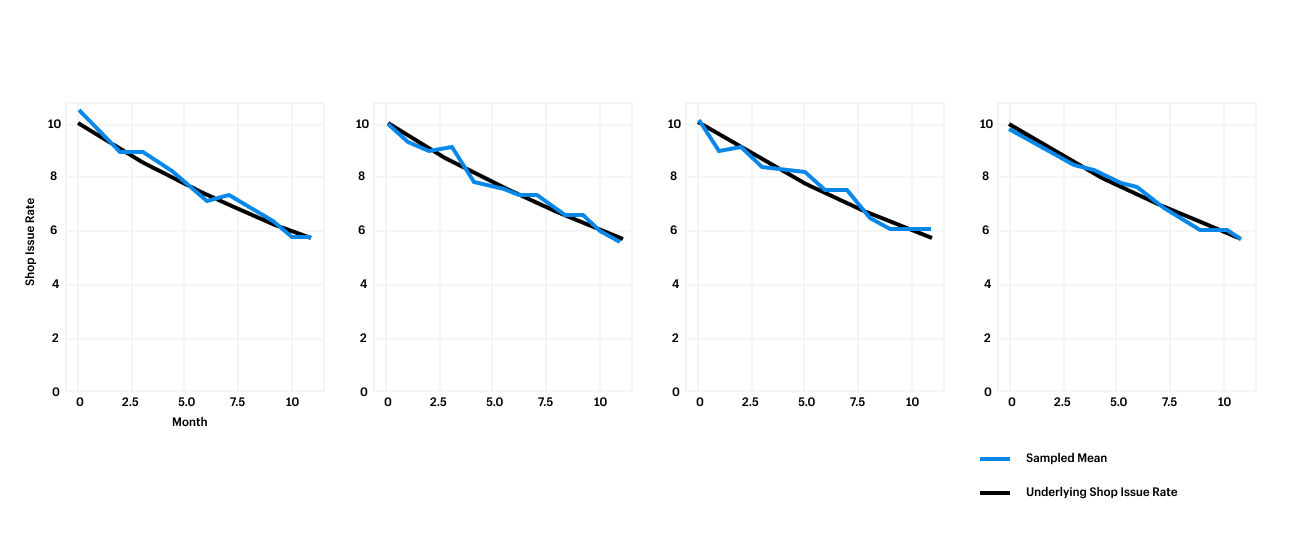

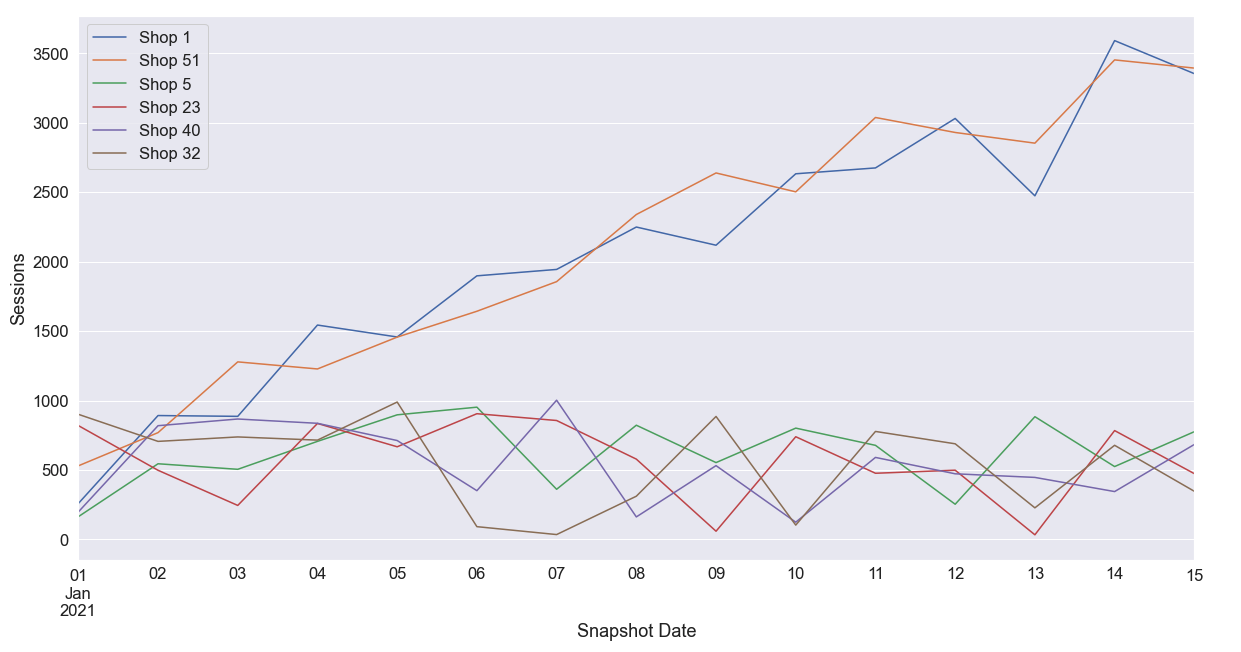

For our first simulation, we’ll start with a function that generates a time series of issue counts, drawn from a distribution of apps where the population issue rate is in fact decreasing by a given percentage per month. For this simulation, we’ll draw from 100 sample time series. This sample size will provide us with a fairly stable estimate of our simulation metrics, without taking too long to run. Below is the output of the simulation:

This function returns a sample dataset of n=audits_per_period apps over m=periods months, where the number of issues for each app is drawn from a Poisson distribution. In the chart below, you can see how the sampled shop issue rate varies around the true underlying number: a mean of 10 issues that decreases 5 percent every month.
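A minimal sketch of how a `generate_time_series` function like this could be written with NumPy and pandas. The `audits_per_period` and `periods` parameters follow the description above; `initial_mean`, `monthly_decrease`, the `rng` argument, and the column names are assumptions.

```python
import numpy as np
import pandas as pd


def generate_time_series(audits_per_period, periods, initial_mean=10.0,
                         monthly_decrease=0.05, rng=None):
    """Simulate `periods` months of random app audits.

    Returns one row per month with the true population mean and the
    sampled shop issue rate (mean issue count across the audited apps).
    """
    rng = np.random.default_rng() if rng is None else rng
    months = np.arange(periods)
    # The true population mean shrinks by `monthly_decrease` every month.
    true_mean = initial_mean * (1 - monthly_decrease) ** months
    # Draw `audits_per_period` Poisson issue counts for each month.
    issues = rng.poisson(true_mean[:, None], size=(periods, audits_per_period))
    return pd.DataFrame({
        "month": months,
        "true_mean": true_mean,
        "sampled_rate": issues.mean(axis=1),
    })


ts = generate_time_series(audits_per_period=100, periods=12, rng=np.random.default_rng(7))
print(ts.round(2))
```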

Now that we’ve run our first simulation, we can calculate our variability metrics, MAPE and 1mDO. The code block below calculates them for us:

This code tells us how often we actually see a month-over-month decrease in our sampled shop issue rate. Interpreted another way: how long do we need to wait before we can act on this data?

In this first simulation, we found that the MAPE was 4.3 percent. In other words, the simulated shop issue rate differed from the population mean by 4.3 percent on average. Our 1mDO was 72 percent, meaning our sampled metric decreased in 72 percent of months. These results aren’t great, but was it a fluke? We’ll want to run a few more simulations to build confidence in our simulation metrics.

Step 4: Running Multiple Simulations with the Same Parameter Values

The code below runs our generate_time_series function n=iterations times with the given parameters, and returns a DataFrame of our simulation metrics for each iteration. So, if we run this with 50 iterations, we’ll get back 50 time series, each with 100 sampled audits per month. Averaging across iterations gives us stable estimates of our simulation metrics.
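A minimal sketch of such a `run_simulation` loop under the same Poisson assumptions. It includes a compact `generate_time_series` so it runs on its own; the parameter defaults, the seed, and the metric column names are assumptions rather than the original code.

```python
import numpy as np
import pandas as pd


def generate_time_series(audits_per_period, periods, initial_mean, monthly_decrease, rng):
    """Return the true monthly means and the sampled shop issue rate for one simulated year."""
    true_mean = initial_mean * (1 - monthly_decrease) ** np.arange(periods)
    issues = rng.poisson(true_mean[:, None], size=(periods, audits_per_period))
    return true_mean, issues.mean(axis=1)


def run_simulation(iterations=50, audits_per_period=100, periods=12,
                   initial_mean=10.0, monthly_decrease=0.05, seed=0):
    """Repeat generate_time_series and collect one row of simulation metrics per iteration."""
    rng = np.random.default_rng(seed)
    rows = []
    for i in range(iterations):
        true_mean, sampled = generate_time_series(
            audits_per_period, periods, initial_mean, monthly_decrease, rng)
        rows.append({
            "iteration": i,
            "mape": float(np.mean(np.abs(sampled - true_mean) / true_mean)),
            # Share of months lower than the previous month / than two months earlier.
            "1mDO": float(np.mean(np.diff(sampled) < 0)),
            "2mDO": float(np.mean(sampled[2:] - sampled[:-2] < 0)),
        })
    return pd.DataFrame(rows)


results = run_simulation()
print(results[["mape", "1mDO", "2mDO"]].mean())  # averages across all iterations
```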

Now, the number of simulations to run depends on your use case, but 50 is a good place to start. If you’re simulating a manufacturing process where millimeter precision is important, you’ll want to run hundreds or thousands of iterations. These iterations are cheap to run, so increasing the iteration count to improve your precision just means they’ll take a little while longer to complete.

For our example, 50 sampled time series give us enough confidence that these metrics represent the true variability of the shop issue rate, as long as our real-world inputs are within the range of our assumptions.

Step 5: Running Simulations Across Combinations of Parameter Values

Now that we’re able to get reliable estimates of our metrics for any set of inputs, we can run simulations across various combinations of assumptions. This will help us understand how our variability metrics respond to changes in inputs. This approach is analogous to the grid search approach to hyperparameter tuning in machine learning. Remember, for our app store example, we want to understand how our metrics respond to both the monthly percentage decrease driven by our review processes and the monthly sample size.

We’ll use the code below to specify a reasonable range of values for the monthly impact on our success metric, and some possible sample sizes. We’ll then run the run_simulation function across those ranges. This code is designed to allow us to search across any dimension. For example, we could replace the monthly decrease parameter with the initial mean issue count, or add it as a third parameter to understand the sensitivity of our metrics across more than two dimensions.
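A minimal grid search sketch under the same Poisson assumptions. For speed, it draws all iterations for one parameter set in a single NumPy call; `simulate_metrics` and `grid_search` are hypothetical names, and the parameter ranges are illustrative. Because `grid_search` takes a dictionary of parameter ranges, searching a different dimension only changes that dictionary.

```python
import itertools

import numpy as np
import pandas as pd


def simulate_metrics(audits_per_period, monthly_decrease, initial_mean=10.0,
                     iterations=50, periods=12, seed=0):
    """Average 1mDO and MAPE over `iterations` simulated years for one parameter set."""
    rng = np.random.default_rng(seed)
    true_mean = initial_mean * (1 - monthly_decrease) ** np.arange(periods)
    # One Poisson draw per (iteration, month, audited app).
    issues = rng.poisson(true_mean[None, :, None],
                         size=(iterations, periods, audits_per_period))
    sampled = issues.mean(axis=2)  # shape: (iterations, periods)
    return {
        "1mDO": float(np.mean(np.diff(sampled, axis=1) < 0)),
        "mape": float(np.mean(np.abs(sampled - true_mean) / true_mean)),
    }


def grid_search(param_grid, **fixed_params):
    """Run simulate_metrics for every combination of values in `param_grid`."""
    names = list(param_grid)
    rows = []
    for values in itertools.product(*param_grid.values()):
        params = dict(zip(names, values))
        rows.append({**params, **simulate_metrics(**params, **fixed_params)})
    return pd.DataFrame(rows)


grid = {
    "audits_per_period": [50, 100, 150],     # candidate monthly sample sizes
    "monthly_decrease": [0.02, 0.05, 0.10],  # candidate monthly impacts
}
results = grid_search(grid)
print(results.pivot(index="audits_per_period", columns="monthly_decrease", values="1mDO"))
```

To search the initial mean instead, pass it in the grid and fix the rest, for example `grid_search({"initial_mean": [5.0, 10.0, 20.0], "audits_per_period": [50, 100]}, monthly_decrease=0.05)`.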

The simulation will produce a range of outcomes. Looking at our results below, we can tell our stakeholders that if we start at 10 average issues per audit, run 100 random audits per month, and decrease the underlying issue rate by 5 percent each month, we should see monthly decreases in our success metric 83 percent of the time. Over two months, we can expect to see a decrease 97 percent of the time.

With our simulations, we're able to clearly express the uncertainty tradeoff in terms that our stakeholders can understand and implement. For example, we can look to our results and communicate that an additional 50 audits per month would yield quantifiable improvements in certainty. This insight can enable our stakeholders to make an informed decision about whether that certainty is worth the additional expense.

And there we have it! The next time you’re looking to separate signal from noise in your sampled success metric, try using a Monte Carlo simulation. This introductory guide just scratches the surface of this complex problem, but it’s a great starting point and I hope you turn to it in the future.

Tom is a data scientist working on systems to improve app quality at Shopify. In his career, he tried product management, operations and sales before figuring out that SQL is his love language. He lives in Brooklyn with his wife and enjoys running, cycling and writing code.

Are you passionate about solving data problems and eager to learn more about Shopify? Check out openings on our careers page.

3 (More) Tips for Optimizing Apache Flink Applications

By Kevin Lam and Rafael Aguiar

At Shopify, we’ve adopted Apache Flink as a standard stateful streaming engine that powers a variety of use cases. Earlier this year, we shared our tips for optimizing large stateful Flink applications. Below we’ll walk you through 3 more best practices.

1. Set the Right Parallelism

A Flink application consists of multiple tasks, including transformations (operators), data sources, and sinks. These tasks are split into several parallel instances for execution and data processing.

Parallelism refers to the parallel instances of a task and is a mechanism that enables you to scale in or out. It's one of the main contributing factors to application performance. Increasing parallelism allows an application to leverage more task slots, which can increase the overall throughput and performance.

Application parallelism can be configured in a few different ways, including:

- Operator level

- Execution environment level

- Client level

- System level

The configuration choice really depends on the specifics of your Flink application. For instance, if some operators in your application are known to be a bottleneck, you may want to only increase the parallelism for that bottleneck.

We recommend starting with a single execution environment level parallelism value and increasing it if needed. This is a good starting point as task slot sharing allows for better resource utilization: when I/O-intensive subtasks block, non-I/O subtasks can make use of the task manager resources.

A good rule to follow when identifying parallelism is:

The number of task managers multiplied by the number of task slots in each task manager must be equal to (or slightly higher than) the highest parallelism value.

For example, when using parallelism of 100 (either defined as a default execution environment level or at a specific operator level), you would need to run 25 task managers, assuming each task manager has four slots: 25 x 4 = 100.
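The rule above is simple arithmetic. A small Python helper (hypothetical, not part of Flink) rounds up so the total slot count is equal to or slightly higher than the highest parallelism. Only the highest parallelism matters because, with Flink's default slot sharing, subtasks of different operators in the same job can share a slot.

```python
import math


def task_managers_needed(max_parallelism, slots_per_task_manager):
    """Smallest task manager count whose total slots cover the highest parallelism."""
    return math.ceil(max_parallelism / slots_per_task_manager)


print(task_managers_needed(100, 4))  # 25 task managers, 100 slots (the example above)
print(task_managers_needed(102, 4))  # 26 task managers, 104 slots (rounded up)
```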

2. Avoid Sink Bottlenecks

Data pipelines usually have one or more data sinks (destinations like Bigtable, Apache Kafka, and so on) which can sometimes become bottlenecks in your Flink application. For example, if your target Bigtable instance has high CPU utilization, it may start to affect your Flink application due to Flink being unable to keep up with the write traffic. You may not see any exceptions, but you’ll see decreased throughput all the way back to your sources. You’ll also see backpressure in the Flink UI.

When sinks are the bottleneck, the backpressure will propagate to all of their upstream dependencies, which could be your entire pipeline. You want to make sure that your sinks are never the bottleneck!

In cases where latency can be sacrificed a little, a useful first step against bottlenecks is to batch writes to the sink in favor of higher throughput. A batch write request collects multiple events into a bundle and submits them to the sink at once, rather than submitting one event at a time. Batch writes will often lead to better compression, lower network usage, and a smaller CPU hit on the sinks. See Kafka’s batch.size property and Bigtable’s bulk mutations for examples.
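The batching idea can be sketched in Python as a small buffer that hands events to the sink in bundles. `BatchingWriter` and `write_batch` are hypothetical names standing in for a real sink client. Production clients usually also flush on a timer (Kafka producers, for example, pair `batch.size` with `linger.ms`) so a half-full batch doesn't wait indefinitely.

```python
from typing import Callable, List


class BatchingWriter:
    """Collect events and hand them to `write_batch` in bundles (hypothetical helper)."""

    def __init__(self, write_batch: Callable[[List[str]], None], max_batch_size: int = 500):
        self.write_batch = write_batch  # stands in for one bulk request to the sink
        self.max_batch_size = max_batch_size
        self.buffer: List[str] = []

    def write(self, event: str) -> None:
        self.buffer.append(event)
        if len(self.buffer) >= self.max_batch_size:
            self.flush()

    def flush(self) -> None:
        """Send whatever is buffered as a single request, e.g. on shutdown or a timer."""
        if self.buffer:
            self.write_batch(self.buffer)
            self.buffer = []


sent_batches = []
writer = BatchingWriter(sent_batches.append, max_batch_size=3)
for i in range(7):
    writer.write(f"event-{i}")
writer.flush()  # flush the partial final batch
print([len(batch) for batch in sent_batches])  # [3, 3, 1]: three requests instead of seven
```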

You’ll also want to check and fix any data skew. In the same Bigtable example, you may have heavily skewed keys which will affect a few of Bigtable’s hottest nodes. Flink uses keyed streams to scale out to nodes. The concept involves the events of a stream being partitioned according to a specific key. Flink then processes different partitions on different nodes.

The keyBy operation is frequently used to re-key a DataStream in order to perform aggregation or a join. It’s very easy to use, but it can cause a lot of problems if the chosen key isn’t evenly distributed. For example, at Shopify, choosing a shop ID as our key wouldn’t be ideal. A shop ID is the identifier of a single merchant shop on our platform. Different shops have very different traffic, meaning some Flink task managers would be busy processing data while others would stay idle. This could easily lead to out-of-memory exceptions and other failures. Low-cardinality IDs (< 100) are also problematic because it’s hard to distribute them evenly among the task managers.

But what if you absolutely need to use a less than ideal key? Well, you can apply a bucketing technique:

- Choose a maximum number (start with a number smaller than or equal to the operator parallelism)

- Randomly generate a value between 0 and the max number

- Append it to your key before keyBy

By applying a bucketing technique, your processing logic is better distributed (up to the maximum number of additional buckets per key). However, you need to come up with a way to combine the results in the end. For instance, if after processing all your buckets you find the data volume is significantly reduced, you can keyBy the stream by your original “less than ideal” key without creating problematic data skew. Another approach could be to combine your results at query time, if your query engine supports it.
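The bucketing technique and the final combine step can be sketched in plain Python, with dictionaries standing in for keyed Flink state. The shop IDs, the `#` separator, and the bucket count are illustrative assumptions; in a Flink job, phase one would run after a keyBy on the salted key and phase two after a keyBy on the original key.

```python
import random
from collections import defaultdict

NUM_BUCKETS = 4  # assumed; start at or below the operator parallelism


def salted_key(key, num_buckets, rng):
    """Append a random bucket in [0, num_buckets) so one hot key spreads over several keys."""
    return f"{key}#{rng.randrange(num_buckets)}"


def two_phase_count(events, num_buckets=NUM_BUCKETS, seed=0):
    """Count events per key in two phases: by salted key, then by original key."""
    rng = random.Random(seed)
    # Phase 1: partial counts per salted key (the work a keyBy on the salted key spreads out).
    partial = defaultdict(int)
    for key in events:
        partial[salted_key(key, num_buckets, rng)] += 1
    # Phase 2: strip the bucket suffix and combine the much smaller partial results.
    totals = defaultdict(int)
    for salted, count in partial.items():
        totals[salted.rsplit("#", 1)[0]] += count
    return dict(partial), dict(totals)


events = ["shop-1"] * 1_000 + ["shop-2"] * 10  # one very busy shop, one quiet shop
partial, totals = two_phase_count(events)
print(sorted(partial.items()))  # shop-1's events are split across up to NUM_BUCKETS keys
print(totals)                   # {'shop-1': 1000, 'shop-2': 10}
```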

3. Use HybridSource to Combine Heterogeneous Sources

Let’s say you need to abstract several heterogeneous data sources into one, with some ordering. For example, at Shopify a large number of our Flink applications read and write to Kafka. In order to save costs associated with storage, we enforce per-topic retention policies on all our Kafka topics. This means that after a certain period of time has elapsed, data is expired and removed from the Kafka topics. Since users may still care about this data after it’s expired, we support configuring Kafka topics to be archived. When a topic is archived, all Kafka data for that topic is copied to cloud object storage for long-term storage. This ensures it’s not lost when the retention period elapses.

Now, what do we do if we need our Flink application to read all the data associated with a topic configured to be archived, for all time? Well, we could create two sources—one source for reading from the cloud storage archives, and one source for reading from the real-time Kafka topic. But this creates complexity. By doing this, our application would be reading from two points in event time simultaneously, from two different sources. On top of this, if we care about processing things in order, our Flink application has to explicitly implement application logic which handles that properly.

If you find yourself in a similar situation, don’t worry: there’s a better way! You can use HybridSource to make the archive and real-time data look like one logical source. Using HybridSource, you can provide your users with a single source that first reads from the cloud storage archives for a topic, and then when the archives are exhausted, switches over automatically to the real-time Kafka topic. The application developer only sees a single logical DataStream and they don’t have to think about any of the underlying machinery. They simply get to read the entire history of data.
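Conceptually, the switch works like the toy Python generator below: exhaust the archive, then start the live source from the position where the archive ended. This is not Flink's HybridSource API (which lives in Flink's Java/Scala connectors); the record layout, offsets, and function names are assumptions for illustration.

```python
from typing import Callable, Iterable, Iterator, Tuple

Record = Tuple[int, str]  # (offset, payload)


def hybrid_read(
    archive: Iterable[Record],
    live_from_offset: Callable[[int], Iterable[Record]],
) -> Iterator[Record]:
    """Read the archive until it's exhausted, then switch to the live source."""
    last_offset = -1
    for offset, payload in archive:
        last_offset = offset
        yield offset, payload
    # Hand off right after the last archived offset, so the switch neither
    # replays records the archive already produced nor skips any.
    yield from live_from_offset(last_offset + 1)


topic = [(i, f"order-{i}") for i in range(10)]  # stand-in for a topic's full history
archive = topic[:6]  # older records already copied to object storage
live = topic[3:]     # retention has removed offsets 0-2 from the live topic


def live_from_offset(start: int) -> Iterator[Record]:
    return (record for record in live if record[0] >= start)


print([offset for offset, _ in hybrid_read(archive, live_from_offset)])  # offsets 0 through 9, once each
```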

Using HybridSource to read cloud object storage data also means you can leverage a higher number of input partitions to increase read throughput. While one of our Kafka topics might be partitioned across tens or hundreds of partitions to support enough throughput for live data, our object storage datasets are typically partitioned across thousands of partitions per split (e.g., day) to accommodate vast amounts of historical data. The superior object storage partitioning, when combined with enough task managers, will allow Flink to blaze through the historical data, dramatically reducing the backfill time compared to reading the same amount of data straight from a less granularly partitioned Kafka topic.

Here’s what creating a DataStream using our HybridSource-powered KafkaBackfillSource looks like in Scala:

In the code snippet, the KafkaBackfillSource abstracts away the existence of the archive (which is inferred from the Kafka topic and cluster), so that the developer can think of everything as a single DataStream.

HybridSource is a very powerful construct and should definitely be considered if you need your Flink application to read several heterogeneous data sources in an ordered format.

And there you go! 3 more tips for optimizing large stateful Flink applications. We hope you enjoyed our key learnings and that they help you out when implementing your own Flink applications. If you’re looking for more tips and haven’t read our first blog, make sure to check it out here.

Kevin Lam works on the Streaming Capabilities team under Production Engineering. He's focused on making stateful stream processing powerful and easy at Shopify. In his spare time he enjoys playing musical instruments, and trying out new recipes in the kitchen.

Rafael Aguiar is a Senior Data Engineer on the Streaming Capabilities team. He is interested in distributed systems and all things large-scale analytics. When he is not baking some homemade pizza, he is probably lost outdoors. Follow him on LinkedIn.

Interested in tackling the complex problems of commerce and helping us scale our data platform? Join our team.

Using Server Sent Events to Simplify Real-time Streaming at Scale

When building any kind of real-time data application, trying to figure out how to send messages from the server to the client (or vice versa) is a big part of the equation. Over the years, various communication models have popped up to handle server-to-client communication, including Server Sent Events (SSE).

SSE is a unidirectional server push technology that enables a web client to receive automatic updates from a server via an HTTP connection. With SSE, data delivery is quick and simple because there’s no periodic polling, so there’s no need to temporarily stage data.

This was a perfect addition to a real-time data visualization product Shopify ships every year—our Black Friday Cyber Monday (BFCM) Live Map.

Our 2021 Live Map system was complex and used a polling communication model that wasn’t well suited to this use case. While this system had 100 percent uptime, it wasn’t without its bottlenecks. We knew we could improve performance and data latency.

Below, we’ll walk through how we implemented an SSE server to simplify our BFCM Live Map architecture and improve data latency. We’ll discuss choosing the right communication model for your use case, the benefits of SSE, and code examples for implementing a scalable SSE server in Golang that’s load-balanced with NGINX.

Choosing a Real-time Communication Model

First, let’s discuss choosing how to send messages. When it comes to real-time data streaming, there are three communication models:

- Push: This is the most real-time model. The client opens a connection to the server and that connection remains open. The server pushes messages and the client waits for those messages. The server manages a registry of connected clients to push data to. The scalability is directly related to the scalability of this registry.

- Polling: The client makes a request to the server and gets a response immediately, whether there's a message or not. This model can waste bandwidth and resources when there are no new messages. While this model is the easiest to implement, it doesn’t scale well.

- Long polling: This is a combination of the two models above. The client makes a request to the server, but the connection is kept open until a response with data is returned. Once a response with new data is returned, the connection is closed.

No model is inherently better than the others; it really depends on the use case.

Our use case is the Shopify BFCM Live Map, a web user interface that processes and visualizes real-time sales made by millions of Shopify merchants over the BFCM weekend. The data we’re visualizing includes:

- Total sales per minute

- Total number of orders per minute

- Total carbon offset per minute

- Total shipping distance per minute

- Total number of unique shoppers per minute

- A list of latest shipping orders

- Trending products

BFCM is the biggest data moment of the year for Shopify, so streaming real-time data to the Live Map is a complicated feat. Our platform is handling millions of orders from our merchants. To put that scale into perspective, during BFCM 2021 we saw 323 billion rows of data ingested by our ingestion service.

For the BFCM Live Map to be successful, it requires a scalable and reliable pipeline that provides accurate, real-time data in seconds. A crucial part of that pipeline is our server-to-client communication model. We need something that can handle both the volume of data being delivered, and the load of thousands of people concurrently connecting to the server. And it needs to do all of this quickly.

Our 2021 BFCM Live Map delivered data to a presentation layer via WebSocket. The presentation layer then deposited data in a mailbox system for the web client to periodically poll, taking (at minimum) 10 seconds. In practice, this worked, but the data had to travel a long path of components to be delivered to the client.

Data was provided by a multi-component backend system consisting of a Golang-based application (Cricket) using a Redis server and a MySQL database. The Live Map’s data pipeline consisted of a multi-region, multi-job Apache Flink-based application. Flink processed source data from Apache Kafka topics and Google Cloud Storage (GCS) Parquet-file enrichment data to produce into other Kafka topics for Cricket to consume.

While this got the job done, the complex architecture caused bottlenecks in performance. In the case of our trending products data visualization, it could take minutes for changes to become available to the client. We needed to simplify in order to improve our data latency.

As we approached this simplification, we knew we wanted to deprecate Cricket and replace it with a Flink-based data pipeline. We’ve been investing in Flink over the past couple of years, and even built our streaming platform on top of it—we call it Trickle. We knew we could leverage these existing engineering capabilities and infrastructure to streamline our pipeline.

With our data pipeline figured out, we needed to decide on how to deliver the data to the client. We took a look at how we were using WebSocket and realized it wasn’t the best tool for our use case.

Server Sent Events Versus WebSocket

WebSocket provides a bidirectional communication channel over a single TCP connection. This is great to use if you’re building something like a chat app, because both the client and the server can send and receive messages across the channel. But, for our use case, we didn’t need a bidirectional communication channel.

The BFCM Live Map is a data visualization product, so we only need the server to deliver data to the client. If we continued to use WebSocket, it wouldn’t be the most streamlined solution. SSE, on the other hand, is a better fit for our use case. If we went with SSE, we’d be able to implement:

- A secure unidirectional push: The connection stream is coming from the server and is read-only.

- A connection that uses ubiquitously familiar HTTP requests: This is a benefit for us because we were already using HTTP, so we wouldn’t need to implement a special, esoteric protocol.

- Automatic reconnection: If there's a loss of connection, reconnection is automatically retried after a certain amount of time.

But most importantly, SSE would allow us to remove the process of retrieving, processing, and storing data on the presentation layer for the purpose of client polling. With SSE, we would be able to push the data as soon as it becomes available. There would be no more polls and reads, so no more delay. This, paired with a new streamlined pipeline, would simplify our architecture, scale with peak BFCM volumes and improve our data latency.

With this in mind, we decided to implement SSE as our communication model for our 2022 Live Map. Here’s how we did it.

Implementing SSE in Golang

We implemented an SSE server in Golang that subscribes to Kafka topics and pushes the data to all registered clients’ SSE connections as soon as it’s available.
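The heart of this push model is a registry of connected clients, each with its own buffer, that every new message is fanned out to. A minimal sketch of that idea with Python's asyncio queues follows (a Go implementation would typically use a channel per client). `ClientRegistry`, the buffer size, and the drop-when-full policy for slow clients are assumptions, not necessarily what the Shopify server does.

```python
import asyncio
from typing import Dict, Tuple


class ClientRegistry:
    """Track connected SSE clients and fan each message out to all of them (hypothetical sketch)."""

    def __init__(self, buffer_size: int = 100):
        self.clients: Dict[int, asyncio.Queue] = {}
        self.buffer_size = buffer_size
        self._next_id = 0

    def register(self) -> Tuple[int, asyncio.Queue]:
        """Called when a client opens its SSE connection."""
        client_id = self._next_id
        self._next_id += 1
        queue: asyncio.Queue = asyncio.Queue(maxsize=self.buffer_size)
        self.clients[client_id] = queue
        return client_id, queue

    def unregister(self, client_id: int) -> None:
        """Called when a client disconnects."""
        self.clients.pop(client_id, None)

    def broadcast(self, message: str) -> int:
        """Push `message` to every client; skip clients whose buffer is full."""
        delivered = 0
        for queue in self.clients.values():
            try:
                queue.put_nowait(message)
                delivered += 1
            except asyncio.QueueFull:
                pass  # assumed policy: a slow client shouldn't block everyone else
        return delivered


async def demo():
    registry = ClientRegistry(buffer_size=2)
    _, a_queue = registry.register()
    b_id, b_queue = registry.register()
    registry.broadcast('{"orders": 1}')  # e.g. a message consumed from a Kafka topic
    registry.unregister(b_id)
    registry.broadcast('{"orders": 2}')
    return [a_queue.get_nowait() for _ in range(a_queue.qsize())], b_queue.qsize()


print(asyncio.run(demo()))
```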

A real-time streaming Flink data pipeline processes raw Shopify merchant sales data from Kafka topics. It also processes periodically updated product classification enrichment data on GCS in the form of compressed Apache Parquet files. These are then computed into our sales and trending product data respectively and published into Kafka topics.

Here’s a code snippet of how the server registers an SSE connection:

Subscribing to the SSE endpoint is simple with the EventSource interface. Typically, client code creates a native EventSource object and registers an event listener on the object. The event is available in the callback function:

When it came to integrating the SSE server with our frontend UI, the UI application was expected to subscribe to an authenticated SSE server endpoint to receive data. Data being pushed from the server to the client is publicly accessible during BFCM, but the authentication enables us to control access when the site is no longer public. The server that hosts the client provides it with pre-generated JWT tokens for the subscription. We used the open-sourced EventSourcePolyfill implementation to pass an authorization header with the request:

Once subscribed, data is pushed to the client as it becomes available. Data follows the SSE format, with a JSON payload that the client parses.
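To show what the SSE format looks like on the wire, here is a small Python sketch that serializes a JSON payload as an SSE message and parses it back, which is roughly what EventSource does for the browser. In the SSE format, each field is a `name: value` line, multiple `data:` lines are joined with newlines, and a blank line ends the event; the server also sends the response with a `Content-Type: text/event-stream` header. The event name and payload fields are hypothetical.

```python
import json


def format_sse_event(payload, event=None, event_id=None):
    """Serialize a JSON-serializable payload as one Server-Sent Events message."""
    lines = []
    if event_id is not None:
        lines.append(f"id: {event_id}")
    if event is not None:
        lines.append(f"event: {event}")
    # Every line of the data needs its own "data:" field.
    for data_line in json.dumps(payload).splitlines():
        lines.append(f"data: {data_line}")
    return "\n".join(lines) + "\n\n"  # the blank line ends the event


def parse_sse_event(raw):
    """Parse one event block back into (event type, payload); ignores id and retry for brevity."""
    event_type, data_lines = "message", []
    for line in raw.splitlines():
        if not line or line.startswith(":"):
            continue  # blank separator or comment line
        field, _, value = line.partition(":")
        if value.startswith(" "):
            value = value[1:]  # a single leading space after the colon is dropped
        if field == "event":
            event_type = value
        elif field == "data":
            data_lines.append(value)
    return event_type, json.loads("\n".join(data_lines))


message = format_sse_event({"sales_per_minute": 1234}, event="sales", event_id=42)
print(repr(message))
print(parse_sse_event(message))  # ('sales', {'sales_per_minute': 1234})
```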

Ensuring SSE Can Handle Load

Our 2021 system struggled under a large number of requests from user sessions at peak BFCM volume due to the message bus bottleneck. We needed to ensure our SSE server could handle our expected 2022 volume.

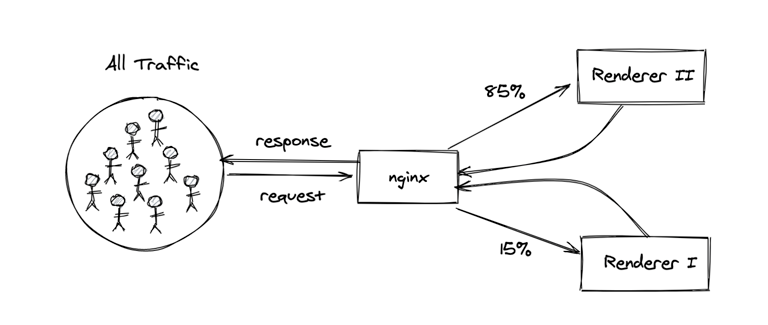

With this in mind, we built our SSE server to be horizontally scalable, with the cluster of VMs sitting behind Shopify’s NGINX load balancers. As the load increases or decreases, we can elastically expand or shrink the cluster by adding or removing pods. However, it was essential to determine the limit of each pod so that we could plan our cluster size accordingly.

One of the challenges of operating an SSE server is determining how it will behave under load and handle concurrent connections. The server maintains its connections to clients so that it knows which ones are active, and thus which ones to push data to. On the client side, the browser implements the SSE connection, including the retry logic, and it wouldn’t be practical to open tens of thousands of real browser SSE connections. So, we needed to simulate a high volume of connections in a load test to determine how many concurrent users a single server pod can handle. From there, we could work out how to scale out the cluster appropriately.
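Once a load test establishes how many concurrent connections one pod can hold, sizing the cluster is a small calculation. In this sketch, the per-pod limit, the expected number of connections, and the utilization headroom are all hypothetical inputs, not Shopify's figures.

```python
import math


def pods_needed(expected_connections: int, per_pod_limit: int, headroom: float = 0.7) -> int:
    """Pods required so each runs at or below `headroom` of its load-tested connection limit."""
    if per_pod_limit <= 0 or not 0 < headroom <= 1:
        raise ValueError("per_pod_limit must be positive and headroom in (0, 1]")
    usable_per_pod = per_pod_limit * headroom
    return math.ceil(expected_connections / usable_per_pod)


print(pods_needed(100_000, 10_000, headroom=0.5))  # 20
```

For example, if a pod tested at 10,000 connections and you want to run pods at no more than half that, 100,000 expected viewers would need 20 pods.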

We opted to build a simple Java client that can open a configurable number of SSE connections to the server. This Java application is bundled into a runnable JAR that can be distributed to multiple VMs in different regions to simulate the expected number of connections. We used the open-source okhttp-eventsource library to implement this Java client.

Here’s the main code for this Java client:

Did SSE Perform Under Pressure?

With another successful BFCM in the bag, we can confidently say that implementing SSE in our new streamlined pipeline was the right move. Our BFCM Live Map saw 100 percent uptime. As for SSE data latency, data was delivered to clients within milliseconds of becoming available, a big improvement over the minimum 10-second poll of our 2021 system. Overall, including processing in our Flink data pipeline, data was visualized on the BFCM Live Map UI within 21 seconds of its creation time.

We hope you enjoyed this behind the scenes look at the 2022 BFCM Live Map and learned some tips and tricks along the way. Remember, when it comes to choosing a communication model for your real-time data product, keep it simple and use the tool best suited for your use case.

Bao is a Senior Staff Data Engineer who works on the Core Optimize Data team. He's interested in large-scale software system architecture and development, big data technologies, and building robust, high-performance data pipelines.

Our platform handled record-breaking sales over BFCM and commerce isn't slowing down. Want to help us scale and make commerce better for everyone? Join our team.

How to Export Datadog Metrics for Exploration in Jupyter Notebooks

"Is there a way to extract Datadog metrics in Python for in-depth analysis?"

This question has been coming up a lot at Shopify recently, so I thought detailing a step-by-step guide might be useful for anyone going down this same rabbit hole.

Follow along below to learn how to extract data from Datadog and build your analysis locally in Jupyter Notebooks.

Why Extract Data from Datadog?

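As a quick refresher, Datadog is a monitoring and analytics platform used to track the health and performance of services and infrastructure.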

So, why would you ever need Datadog metrics to be extracted?

There are two main reasons why someone may prefer to extract the data locally rather than using Datadog:

- Limited analysis: Datadog offers a limited set of visualizations and lacks the tooling for more complex analysis (e.g., building statistical models).

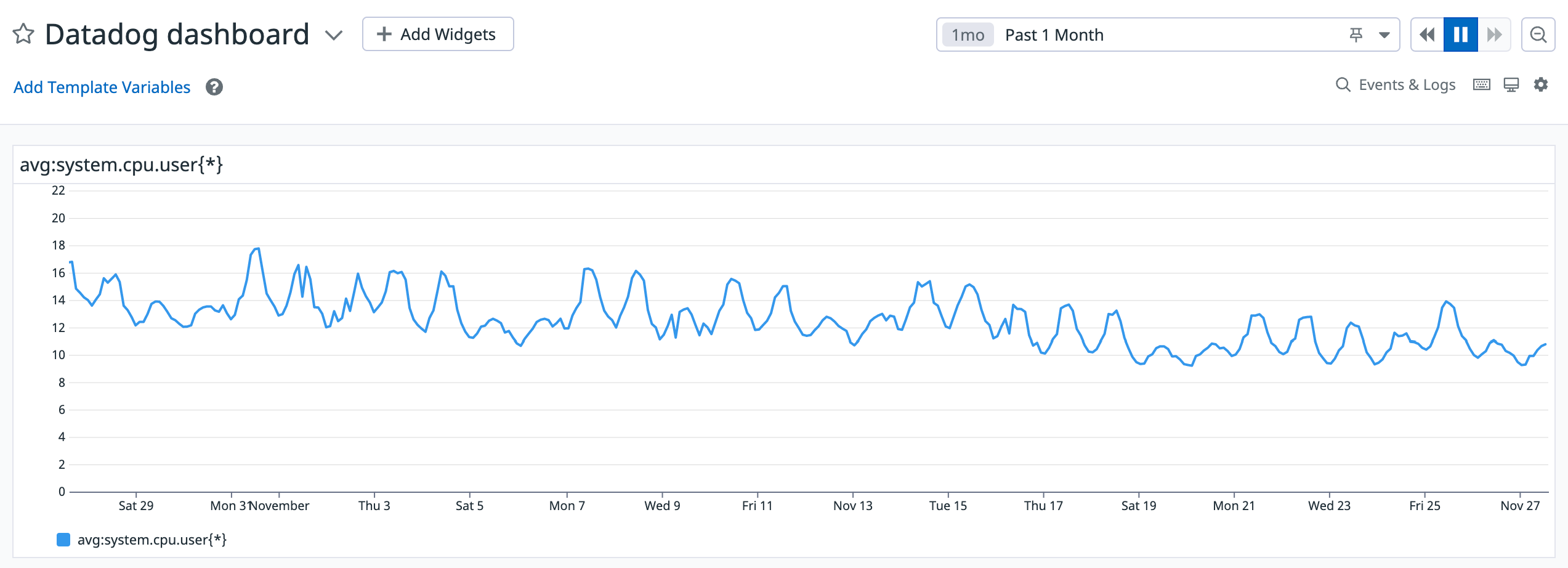

- Granularity of data: Datadog dashboards have a fixed width for their visualizations, so viewing metrics over a longer time frame makes the data less granular. For example, the image below shows a Datadog dashboard capturing a 15-minute span of activity, which displays metrics at a 1-second interval:

By comparison, the image below shows a Datadog dashboard capturing a 30-day span of activity, which displays metrics at a 2-hour interval:

As you can see, Datadog visualizes an aggregated trend over each 2-hour window, which smooths over (hides) any interesting short-lived events. For those reasons, you may prefer to extract the data from Datadog and run your own analysis.
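The smoothing effect is easy to reproduce. This sketch builds one day of synthetic 1-second CPU data with a one-minute spike, then averages it into 2-hour buckets. The data is made up, and averaging is an assumption: it's one common rollup, not necessarily what every Datadog widget uses.

```python
import pandas as pd

# One day of hypothetical 1-second CPU readings: a flat 20% baseline...
idx = pd.date_range("2022-11-22", periods=24 * 3600, freq=pd.Timedelta(seconds=1))
cpu = pd.Series(20.0, index=idx)
# ...with a one-minute spike to 95% starting at 10:00.
cpu.iloc[36_000:36_060] = 95.0

# Average each 2-hour window, similar to a coarse dashboard rollup.
two_hour_mean = cpu.resample(pd.Timedelta(hours=2)).mean()

print(cpu.max())            # 95.0
print(two_hour_mean.max())  # 20.625: the spike is averaged away
```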

How to Extract Data and Build Your Own Analysis

For the purposes of this blog, we’ll be running our analysis in Jupyter notebooks. However, feel free to use your own preferred tool for working with Python.

We’ll use Datadog’s REST API to extract the data.

In order to extract data from Datadog's API, all you need are two things:

- API credentials: You’ll need credentials (an API key and an application key) to interact with the Datadog API.

- Metric query: You need a query to execute in Datadog. For the purposes of this blog, let’s say we want to track CPU utilization over time.

Once you have the above two requirements sorted, you’re ready to dive into the data.

Step 1: Import the required libraries and set up your credentials for making the API calls:
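One dependency-light way to handle credentials is to read the API and application keys from environment variables and send them as the `DD-API-KEY` and `DD-APPLICATION-KEY` headers that Datadog's HTTP API expects. The environment variable names below are assumptions. The official `datadog` Python client is another option.

```python
import os

# Hypothetical variable names; keep keys out of the notebook itself.
DD_API_KEY = os.environ.get("DD_API_KEY", "")
DD_APP_KEY = os.environ.get("DD_APP_KEY", "")


def datadog_headers(api_key: str, app_key: str) -> dict:
    """Authentication headers for Datadog's HTTP API."""
    return {
        "DD-API-KEY": api_key,
        "DD-APPLICATION-KEY": app_key,
        "Accept": "application/json",
    }


HEADERS = datadog_headers(DD_API_KEY, DD_APP_KEY)
```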

Step 2: Specify the parameters for time-series data extraction. Below we’re setting the time period from Tuesday, November 22, 2022 at 16:11:49 GMT to Friday, November 25, 2022 at 16:11:49 GMT:
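The window from the text could be set up as follows. The metric query (`avg:system.cpu.user{*}`, a standard Datadog Agent CPU metric) and the one-hour `time_delta` are assumptions chosen for illustration.

```python
import math
from datetime import datetime, timezone

# Tue 2022-11-22 16:11:49 GMT to Fri 2022-11-25 16:11:49 GMT, as epoch seconds.
start = int(datetime(2022, 11, 22, 16, 11, 49, tzinfo=timezone.utc).timestamp())
end = int(datetime(2022, 11, 25, 16, 11, 49, tzinfo=timezone.utc).timestamp())

# Hypothetical metric query: average user-space CPU across all hosts.
query = "avg:system.cpu.user{*}"

# Width (seconds) of each request window; larger values mean fewer API calls.
time_delta = 3600

n_requests = math.ceil((end - start) / time_delta)
print(start, end, n_requests)  # 1669133509 1669392709 72
```

With a one-hour `time_delta`, the three-day window takes 72 requests. Raising it to six hours would cut that to 12, possibly at the cost of coarser points per request.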

One thing to keep in mind is that Datadog rate-limits API requests. If you run into rate limits, try increasing time_delta to reduce the number of requests you make to the Datadog API.

Step 3: Run the extraction logic. Split the interval between the start and end timestamps into buckets of width time_delta.

Next, call the Datadog API for each bucketed time window in a for loop, appending the data extracted for each window to a list.

Lastly, convert the list to a dataframe and return it:
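Step 3's bucketing, loop, and dataframe conversion could be sketched as follows. It calls Datadog's v1 timeseries query endpoint (`/api/v1/query`, with `query`, `from`, and `to` in epoch seconds) through the standard library. The helper names are hypothetical, and the `fetch` parameter exists so the loop can be exercised without network access.

```python
import json
import time
import urllib.parse
import urllib.request

import pandas as pd

QUERY_URL = "https://api.datadoghq.com/api/v1/query"  # adjust for your Datadog site


def time_buckets(start, end, delta):
    """Split [start, end) into consecutive (from, to) windows of width delta seconds."""
    return [(t, min(t + delta, end)) for t in range(start, end, delta)]


def fetch_window(query, window_start, window_end, headers):
    """Call Datadog's v1 timeseries query endpoint for one window."""
    params = urllib.parse.urlencode({"query": query, "from": window_start, "to": window_end})
    req = urllib.request.Request(f"{QUERY_URL}?{params}", headers=headers)
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.load(resp)


def extract(query, start, end, delta, headers, fetch=fetch_window, pause=1.0):
    """Query each window in turn and collect every point into one DataFrame."""
    rows = []
    for window_start, window_end in time_buckets(start, end, delta):
        payload = fetch(query, window_start, window_end, headers)
        for series in payload.get("series", []):
            # Each point is [timestamp_in_ms, value]; values can be null.
            for ts_ms, value in series.get("pointlist", []):
                rows.append({"timestamp": ts_ms, "scope": series.get("scope"), "value": value})
        time.sleep(pause)  # crude spacing between calls to stay under rate limits
    df = pd.DataFrame(rows, columns=["timestamp", "scope", "value"])
    df["timestamp"] = pd.to_datetime(df["timestamp"], unit="ms", utc=True)
    # Drop null points and any duplicates where adjacent windows share a boundary.
    return df.dropna(subset=["value"]).drop_duplicates().reset_index(drop=True)
```

A call such as `extract(query, start, end, time_delta, headers)` returns one row per data point, ready for the analysis in Step 4.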

Step 4: Voilà, you have the data! As the mock data table below shows, this data is more granular than what Datadog displays.

Now, we can use this to visualize data using any tool we want. For example, let’s use seaborn to look at the distribution of the system’s CPU utilization using KDE plots:

As you can see below, this visualization provides deeper insight.

And there you have it. A super simple way to extract data from Datadog for exploration in Jupyter notebooks.

Kunal is a data scientist on the Shopify ProdEng data science team, working out of Niagara Falls, Canada. His team helps make Shopify’s platform performant, resilient and secure. In his spare time, Kunal enjoys reading about tech stacks, working on IoT devices and spending time with his family.

Are you passionate about solving data problems and eager to learn more about Shopify? Check out openings on our careers page.

Reducing BigQuery Costs: How We Fixed A $1 Million Query

During the infrastructural exploration of a pipeline my team was building, we discovered a query that could have cost us nearly $1 million USD a month in BigQuery. Below, we’ll detail how we reduced this and share our tips for lowering costs in BigQuery.

Processing One Billion Rows of Data

My team was responsible for building a data pipeline for a new marketing tool we were shipping to Shopify merchants. We built our pipeline with Apache Flink and launched the tool in an early release to a select group of merchants. Fun fact: this pipeline became one of the first productionized Flink pipelines at Shopify. During the early release, our pipeline ingested one billion rows of data into our Flink pipeline's internal state (managed by RocksDB), and handled streaming requests from Apache Kafka.

We wanted to take the next step by making the tool generally available to a larger group of merchants. However, this would mean a significant increase in the data our Flink pipeline would be ingesting. Remember, our pipeline was already ingesting one billion rows of data for a limited group of merchants. Ingesting an ever-growing dataset wouldn’t be sustainable.

As a solution, we looked into a SQL-based external data warehouse. We needed something that our Flink pipeline could submit queries to and that could write back results to Google Cloud Storage (GCS). By doing this, we could simplify the current Flink pipeline dramatically by removing ingestion, ensuring we have a higher throughput for our general availability launch.

The external data warehouse needed to meet the following three criteria:

- Atomically load the Parquet dataset easily

- Handle 60 requests per minute (our general availability estimate) without significant queuing or waiting time

- Export the Parquet dataset to GCS easily

The first query engine that came to mind was BigQuery. It’s a data warehouse that can both store petabytes of data and query those datasets within seconds. BigQuery is fully managed by Google Cloud Platform and was already in use at Shopify. We knew we could load our one billion row dataset into BigQuery and export query results into GCS easily. With all of this in mind, we started the exploration but we met an unexpected obstacle: cost.

A Single Query Would Cost Nearly $1 Million

As mentioned above, BigQuery was already in use at Shopify, so our internal data modeling tool had an existing BigQuery loader and we loaded our large dataset into BigQuery easily. However, when we first ran the query, the log showed the following:

total bytes processed: 75462743846, total bytes billed: 75462868992

That’s roughly 75 GB billed for a single query. This immediately raised an alarm, because BigQuery’s on-demand pricing charges by the amount of data each query processes. If each query were to scan 75 GB of data, how much would it cost us at our general availability launch?

I quickly did some rough math. If we estimate 60 RPM at launch, then:

60 RPM x 60 minutes/hour x 24 hours/day x 30 days/month = 2,592,000 queries/month

If each query scans 75 GB of data, then we’re looking at approximately 194,400,000 GB of data scanned per month. According to BigQuery’s on-demand pricing scheme, it would cost us $949,218.75 USD per month!
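The back-of-the-envelope math above can be checked with a few lines of Python. The $5-per-TB on-demand rate, with 1 TB counted as 1,024 GB, is an assumption: it's the rate that reproduces the $949,218.75 figure.

```python
def monthly_on_demand_cost(rpm, gb_per_query, usd_per_tb=5.0, gb_per_tb=1024):
    """Rough monthly on-demand cost for a constant query rate."""
    queries_per_month = rpm * 60 * 24 * 30  # minutes/hour * hours/day * days/month
    gb_per_month = queries_per_month * gb_per_query
    return queries_per_month, gb_per_month, gb_per_month / gb_per_tb * usd_per_tb


queries, gb, usd = monthly_on_demand_cost(rpm=60, gb_per_query=75)
print(queries, gb, usd)  # 2592000 194400000 949218.75
```

Plugging in the clustered table's 108.3 MB (0.1083 GB) per query instead gives roughly $1,370.67 per month.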

Clustering to the Rescue

With the estimation above, we immediately started to look for solutions to reduce this monstrous cost.

We knew that clustering our tables could help reduce the amount of data scanned in BigQuery. As a reminder, clustering sorts the data in a table based on the values of one or more of its columns. You can cluster on columns of types like DATE, GEOGRAPHY, TIMESTAMP, etc. When a query filters on the clustered columns, BigQuery can skip blocks of data that can’t contain matching rows instead of scanning the whole table.

With clustering in mind, we went digging and found several conditions in the query’s WHERE clause whose columns we could cluster on. These were ideal candidates: if we clustered our table on columns that appear in WHERE clauses, BigQuery could use those filters to prune the parts of the table that can’t match, so only the relevant data is scanned instead of the entire table. This reduces the number of bytes scanned and would save us a lot of processing time.
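Why clustering helps can be shown with a toy model of block pruning: split a column into fixed-size blocks, note each block's min and max, and skip any block whose range can't contain the filter value. This is a simplification, not BigQuery's actual storage layout, and the `shop_ids` column is hypothetical.

```python
import random


def blocks_scanned(values, block_size, target):
    """Count fixed-size blocks whose min/max range could contain `target`.

    A block is skipped only when its min/max proves no row in it can
    match the equality filter.
    """
    scanned = 0
    for i in range(0, len(values), block_size):
        block = values[i:i + block_size]
        if min(block) <= target <= max(block):
            scanned += 1
    return scanned


random.seed(7)
shop_ids = [random.randrange(100) for _ in range(10_000)]  # hypothetical filter column

unclustered = blocks_scanned(shop_ids, block_size=100, target=42)
clustered = blocks_scanned(sorted(shop_ids), block_size=100, target=42)
print(unclustered, clustered)
```

In random order, almost every block's range spans the target, so almost nothing can be skipped. After sorting, only the one to three blocks that actually hold the value 42 need to be read.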

We created a clustered table on two feature columns from the query’s WHERE clause. We then ran the exact same query, and the log now showed 508.1 MB billed. That’s roughly 150 times less data scanned than with the unclustered table.

With our newly clustered table, we identified that the query would now only scan 108.3 MB of data. Doing some rough math again:

2,592,000 queries/month x 0.1083 GB of data ≈ 280,714 GB of data scanned/month

That would bring our cost down to approximately $1,370.67 USD per month, which is far more reasonable.

Other Tips for Reducing Cost

While all it took was some clustering for us to significantly reduce our costs, here are a few other tips for lowering BigQuery costs:

- Avoid the SELECT * statement: Only select the columns you need. Because BigQuery stores data by column, this limits the scan to those columns and therefore limits your cost.

- Partition your tables: This is another way to restrict the data scanned, by dividing your table into segments (aka partitions). You can partition tables in BigQuery by a time-unit column, by ingestion time, or by an integer range.

- Don’t run queries to explore or preview data: Doing this would be an unnecessary cost. You can use table preview options to view data for free.
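The first two tips can be summarized with an equally rough cost model: with columnar storage, the data scanned depends on which columns a query references, and partition pruning further limits that to the partitions the query touches. The column sizes are hypothetical, partitions are assumed to be evenly sized, and the model ignores BigQuery's billing minimums and rounding.

```python
def estimated_gb_scanned(column_gb, selected="*", partitions_read=1, total_partitions=1):
    """Toy estimate of data scanned by a query (assumes evenly sized partitions)."""
    columns = list(column_gb) if selected == "*" else selected
    referenced = sum(column_gb[c] for c in columns)  # columnar: only referenced columns are read
    return referenced * partitions_read / total_partitions  # partition pruning


table = {"shop_id": 10, "event_date": 10, "payload": 80}  # hypothetical GB per column

print(estimated_gb_scanned(table))                                   # 100.0
print(estimated_gb_scanned(table, ["shop_id", "event_date"]))         # 20.0
print(estimated_gb_scanned(table, ["shop_id", "event_date"], 3, 30))  # 2.0
```

Selecting two of the three columns and reading three of 30 daily partitions cuts the hypothetical 100 GB scan to 2 GB.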

And there you have it. If you’re working with a high volume of data and using BigQuery, following these tips can help you save big. Beyond cost savings, this is critical for helping you scale your data architecture.

Calvin is a senior developer at Shopify. He enjoys tackling hard and challenging problems, especially in the data world. He’s now working with the Return on Ads Spend group in Shopify. In his spare time, he loves running, hiking and wandering in nature. He is also an amateur Go player.

Are you passionate about solving data problems and eager to learn more about Shopify? Check out openings on our careers page.

How to Structure Your Data Team for Maximum Influence

One of the biggest challenges most managers face (in any industry) is trying to assign their reports work in an efficient and effective way. But as data science leaders—especially those in an embedded model—we’re often faced with managing teams with responsibilities that traverse multiple areas of a business. This juggling act often involves different streams of work, areas of specialization, and stakeholders. For instance, my team serves five product areas, plus two business areas. Without a strategy for dealing with these stakeholders and related areas of work, we risk operational inefficiency and chaotic outcomes.

There are many frameworks out there that suggest the optimal way to structure a team for success. Below, we’ll review these frameworks and their positives and negatives when applied to a data science team. We’ll also share the framework that’s worked best for empowering our data science teams to drive impact.

First, Some Guiding Principles

Before looking at frameworks for managing these complex team structures, I’ll first describe some effective guiding principles we should use when organizing workflows and teams:

- Efficiency: Any structure must provide an ability to get work done in an efficient and effective manner.

- Influence: Structures must be created in such a way that your data science team continues to have influence on business and product strategies. Data scientists often have input that is critical to business and product success, and we want to create an environment where that input can be given and received.

- Stakeholder clarity: We need to create a structure where stakeholders clearly know who to contact to get work done, and seek help and advice from.

- Stability: Some team structures can create instability for reports, which leads to a whole host of other problems.

- Growth: If we create structures where reports only deal with stakeholders and reactive issues, it may be difficult for them to develop professionally. We want to ensure reports have time to tackle work that enables them to acquire a depth of knowledge in specific areas.

- Flexibility: Life happens. People quit, need change, or move on. Our team structures need to recognize that change is inevitable and be able to deal with it.

Traditional Frameworks for Organizing Data Teams

Alright, now let’s look at some of the more popular frameworks used to organize data teams. While they’re not the only ways to structure teams and align work, these frameworks cover most of the major aspects in organizational strategy.

Swim Lanes

You’ve likely heard of this framework before, and maybe even cringed when someone has told you or your report to "stay in your swim lanes". This framework involves assigning someone to very strictly defined areas of responsibility. Looking at the product and business areas my own team supports as an example, we have seven different groups to support. According to the swim lane framework, I would assign one data scientist to each group. With an assigned product or business group, their work would never cross lanes.

In this framework, there's little expected help or cross-training, and everyone is allowed to operate within their own fiefdom. I once worked in an environment like this. We were a group of tenured data scientists who didn’t really know what the others were doing. It worked for a while, but when change occurred (new projects, resignations, retirements) it all seemed to fall apart.

Let’s look at this framework’s advantages:

- Distinct areas of responsibility. In this framework, everyone has their own area of responsibility. As a manager, I know exactly who to assign work to and where certain tasks should go on our board. I can be somewhat removed from the process of workload balancing.

- High levels of individual ownership. Reports own an area of responsibility and have a stake in its success. They also know that their reputation and job are on the line for the success or failure of that area.

- The point of contact is obvious to stakeholders. Ownership is very clear to stakeholders, so they always know who to go to. This model also fosters long-term relationships.

And the disadvantages:

- Lack of cross-training. Individual reports will have very little knowledge of the work or codebase of their peers. This becomes an issue when life happens and we need to react to change.

- Reports can be left on an island. Reports can be left on their own, which tends to matter most when times are tough. This is a problem both for new reports who are trying to onboard and learn new systems and for tenured reports who may suddenly face a higher workload. Help may not be coming.

- Fails under high-change environments. For the reasons mentioned above, this system fails under high-change environments. It also creates a team-level rigidity that means when general organizational changes happen, it’s difficult to react and pivot.

Referring back to our guiding principles for effectively organizing a data team, this framework hits our stakeholder clarity and efficiency principles, but only in stable environments. Swim lanes often fail in conditions of change or when the team needs to pivot to new responsibilities, something most teams should expect.

Stochastic Process

As data scientists, we’re often trained in stochastic processes, and this framework resembles one. As a refresher, a stochastic process is driven by randomness; here, that randomness shows up as near-random assignment of people to areas or categories of work.

Likewise, in this framework each report takes the next project that pops up, resembling a random assignment of work. However, projects are prioritized, and when an employee finishes one project, they take on the next highest-priority project.

This may sound overly random as a system, but I’ve worked on a team like this before. We were a newly formed team, and no one had any specific experience with any of the work we were doing. The system worked well for about six months, but over the course of a year, we felt like we'd been put through the wringer and as though no one had any deep knowledge of what we were working on.

The advantages of this framework are:

- High levels of team collaboration. Everyone is constantly working on each other’s code and projects, so a high level of collaboration tends to develop.

- Reports feel like there is always help. Since the next available person picks up the next priority, anyone struggling with a high-priority task can ask the next available resource for help.

- Extremely flexible under high levels of change. Your organization decides to reorg to align to new areas of the business? No problem! You weren’t aligned to any specific groups of stakeholders to begin with. Someone quits? Again, no problem. Just hire someone new and get them into the rotation.

And the disadvantages:

- Can feel like whiplash. As reports are asked to move constantly from one unrelated project to the next, they can develop feelings of instability and uncertainty (aka whiplash). Additionally, as stakeholders work with a new resource on each project, this can limit the ability to develop rapport.

- Inability to go deep on specialized subject matters. It’s often advantageous for data scientists to dive deep into one area of the business or product. This enables them to develop deep subject area knowledge in order to build better models. If we’re expecting them to move from project to project, this is unlikely to occur.

- Extremely high management inputs. As data scientists become more like cogs in a wheel in this type of framework, management ends up owning most stakeholder relationships and business knowledge. This increases demands on individual managers.

Looking at the advantages and disadvantages of this framework, and measuring them against our guiding principles, this framework only hits two of our principles: flexibility and efficiency. While this framework can work in very specific circumstances (like brand new teams), the lack of stakeholder clarity, relationship building, and growth opportunity will result in the failure of this framework to sufficiently serve the needs of the team and stakeholders.

A New Framework: Diamond Defense

Luckily, we’ve created a third way to organize data teams and work. I like to compare this framework to the diamond defense in basketball. In a diamond defense, players have general areas (zones) of responsibility. However, once play starts, the defense focuses on trapping the toughest problems (sending extra resources to them), while helping out areas of the defense that might be left with fewer resources than needed.

This same defensive approach can be used to structure highly effective data teams. In this framework, you loosely assign reports to your product or business areas, but make sure to rotate resources toward tough projects and wherever help is needed.

Referring back to the product and business areas my team supports, you can see how I use this framework to organize my team:

Each data scientist is assigned to a zone. I then aligned our additional business areas (Finance and Marketing) to a product group, and assigned resources to these groupings. Finance and Marketing are aligned differently here because they are not supported by a team of Software Engineers. Instead, I aligned them to the product group that most closely resembles their work in terms of data accessed and models built. Currently, Marketing has the highest number of requests for our team, so I added more resources to support this group.

You’ll notice on the chart that I keep myself and an additional data scientist in a bullpen. This is key to the diamond defense as it ensures we always have additional resources to help out where needed. Let’s dive into some examples of how we may use resources in the bullpen:

- DS2 is under-utilized. We simultaneously find out that DS1 is overwhelmed by the work of their product area, so we tap DS2 to help out.

- SR DS1 quits. In this case, we rotate DS4 into their place, and proceed to hire a backfill.

- SR DS2 takes a leave of absence. In this situation, I, as the manager, slide in to manage SR DS2’s stakeholders. I would then tap DS4 to help out, while the intern who is also assigned to the same area continues to focus on getting their work done with help from DS4.

This framework has several advantages:

- Everyone has dedicated areas to cover and specialize in. As each report is loosely assigned to a zone (specific product or business area), they can go deep and develop specialized skills.

- Able to quickly jump on problems that pop up. Loose assignment to zones gives teams the flexibility to move resources to the highest-priority areas or toughest problems.

- Reports can get the help they need. If a report is struggling with the workload, you can immediately send more resources towards that person to lighten their load.

And the disadvantages:

- Over-rotation. In certain high-change circumstances, a situation can develop where data scientists spend most of their time covering for other people. This can create very volatile and high-risk situations, including turnover.

This framework hits all of our guiding principles. It provides the flexibility and stability needed when dealing with change, it enables teams to efficiently tackle problems, focus areas enable report growth and stakeholder clarity, and relationships between reports and their stakeholders improve the team's ability to influence policies and outcomes.

Conclusion

There are many ways to organize data teams to different business or product areas, stakeholders, and bodies of work. While the traditional frameworks we discussed above can work in the short-term, they tend to over-focus either on rigid areas of responsibility or everyone being able to take on any project.

If you use one of these frameworks and you’re noticing that your team isn’t working as effectively as you know they can, give our diamond defense framework a try. This hybrid framework addresses all the gaps of the traditional frameworks, and ensures:

- Reports have focus areas and growth opportunity

- Stakeholders have clarity on who to go to

- Resources are available to handle any change

- Your data team is set up for long-term success and impact

Every business and team is different, so we encourage you to play around with this framework and identify how you can make it work for your team. Just remember to reference our guiding principles for complex team structures.

Are you passionate about solving data problems and eager to learn more about Shopify? Check out openings on our careers page.

What is a Full Stack Data Scientist?

At Shopify, we've embraced the idea of full stack data science and are often asked, "What does it mean to be a full stack data scientist?". The term has seen a recent surge in the data industry, but there doesn’t seem to be a consensus on a definition. So, we chatted with a few Shopify data scientists to share our definition and experience.

What is a Full Stack Data Scientist?

"Full stack data scientists engage in all stages of the data science lifecycle. While you obviously can’t be a master of everything, full stack data scientists deliver high-impact, relatively quickly because they’re connected to each step in the process and design of what they’re building." - Siphu Langeni, Data Scientist

"Full stack data science can be summed up by one word—ownership. As a data scientist you own a project end-to-end. You don't need to be an expert in every method, but you need to be familiar with what’s out there. This helps you identify what’s the best solution for what you’re solving for." - Yizhar (Izzy) Toren, Senior Data Scientist

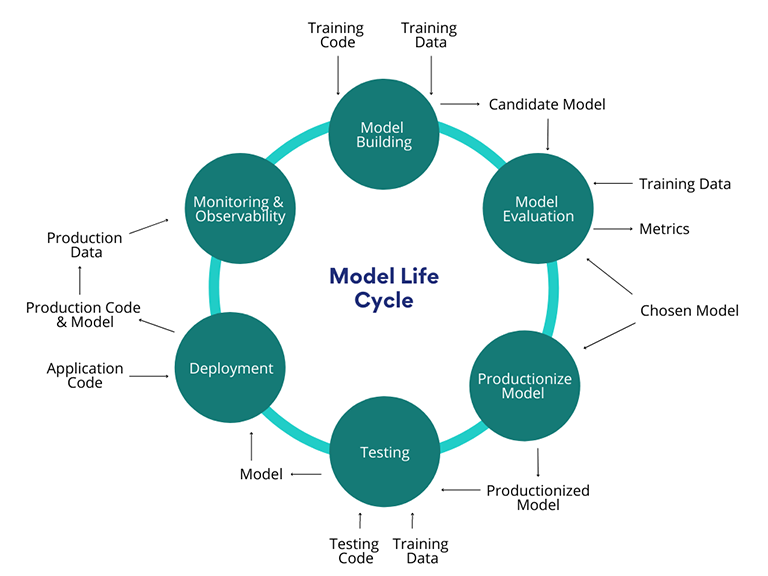

Typically, data science teams are organized to have different data scientists work on singular aspects of a data science project. However, a full stack data scientist’s scope covers a data science project from end-to-end, including:

- Discovery and analysis: How you collect, study, and interpret data from a number of different sources. This stage includes identifying business problems.

- Acquisition: Moving data from diverse sources into your data warehouse.

- Data modeling: The process for transforming data using batch, streaming, and machine learning tools.

What Skills Make a Successful Full Stack Data Scientist?

"Typically the problems you're solving for, you’re understanding them as you're solving them. That’s why you need to be constantly communicating with your stakeholders and asking questions. You also need good engineering practices. Not only are you responsible for identifying a solution, you also need to build the pipeline to ship that solution into production." - Yizhar (Izzy) Toren, Senior Data Scientist

"The most effective full stack data scientists don't just wait for ad hoc requests. Instead, they proactively propose solutions to business problems using data. To effectively do this, you need to get comfortable with detailed product analytics and developing an understanding of how your solution will be delivered to your users." - Sebastian Perez Saaibi, Senior Data Science Manager

Full stack data scientists are generalists rather than specialists. Because they own projects end-to-end, they work with multiple stakeholders and teams, developing a wide range of both technical and business skills, including:

- Business acumen: Full stack data scientists need to be able to identify business problems, and then ask the right questions in order to build the right solution.

- Communication: Good communication—or data storytelling—is a crucial skill for a full stack data scientist who typically helps influence decisions. You need to be able to effectively communicate your findings in a way that your stakeholders will understand and implement.

- Programming: Efficient programming skills in languages like Python and SQL are essential for shipping your code to production.

- Data analysis and exploration: Exploratory data analysis skills are a critical tool for every full stack data scientist, and the results help answer important business questions.

- Data engineering: A full stack data scientist should have the engineering skills to build and deploy model pipelines to production.

- Machine learning: Machine learning is one of many tools a full stack data scientist can use to answer a business question or solve a problem, though it shouldn’t be the default. At Shopify, we’re proponents of starting simple, then iterating with complexity.

What’s the Benefit of Being a Full Stack Data Scientist?

“You get to choose how you want to solve different problems. We don't have one way of doing things because it really depends on what the problem you’re solving for is. This can even include deciding which tooling to use.” - Yizhar (Izzy) Toren, Senior Data Scientist

“You get maximum exposure to various parts of the tech stack, develop a confidence in collaborating with other crafts, and become astute in driving decision-making through actionable insights.” - Siphu Langeni, Data Scientist

As a generalist, is a full stack data scientist a “master of none”? While full stack data scientists are expected to have a breadth of experience across the data science specialty, each will also bring additional expertise in a specific area. At Shopify, we encourage T-shaped development. Emphasizing this type of development not only enables our data scientists to hone skills they excel at, but it also empowers us to work broadly as a team, leveraging the depth of individuals to solve complex challenges that require multiple skill sets.

What Tips Do You Have for Someone Looking to Become a Full Stack Data Scientist?

“Full stack data science might be intimidating, especially for folks coming from academic backgrounds. If you've spent a career researching and focusing on building probabilistic programming models, you might be hesitant to go to different parts of the stack. My advice to folks taking the leap is to treat it as a new problem domain. You've already mastered one (or multiple) specialized skills, so look at embracing the breadth of full stack data science as a challenge in itself.” - Sebastian Perez Saaibi, Senior Data Science Manager

“Ask lots of questions and invest effort into gathering context that could save you time on the backend. And commit to honing your technical skills; you gain trust in others when you know your stuff!” - Siphu Langeni, Data Scientist

To sum it up, a full stack data scientist is a data scientist who:

- Focuses on solving business problems

- Is an owner that’s invested in an end-to-end solution, from identifying the business problem to shipping the solution to production

- Develops a breadth of skills that cover the full stack of data science, while building out T-shaped skills

- Knows which tool and technique to use, and when

If you’re interested in tackling challenges as a full stack data scientist, check out Shopify’s career page.

Shopify Data’s Guide To Opportunity Sizing

For every initiative that a business takes on, there is an opportunity potential and a cost—the cost of not doing something else. But how do you tangibly determine the size of an opportunity?

Opportunity sizing is a method that data scientists can use to quantify the potential impact of an initiative before deciding to invest in it. Although businesses attempt to prioritize initiatives, they rarely do the math to assess the opportunity, relying instead on intuition-driven decision making. While this type of decision making does have its place in business, it also runs the risk of being easily swayed by a number of subtle biases, such as availability bias (overweighting whatever information happens to be at hand), confirmation bias, or our intrinsic desire to pattern-match a new decision to our prior experience.

At Shopify, our data scientists use opportunity sizing to help our product and business leaders make sure that we’re investing our efforts in the most impactful initiatives. This method enables us to be intentional when checking and discussing the assumptions we have about where we can invest our efforts.

Here’s how we think about opportunity sizing.

How to Opportunity Size

Opportunity sizing is more than just a tool for numerical reasoning; it’s a framework businesses can use to have a principled conversation about the impact of their efforts.

An example of opportunity sizing could look like the following statement: if we build feature X, we will acquire MM (+/- delta) new active users within timeframe T under assumptions DD.

So how do we calculate this estimate? First things first: although the timeframe for opportunity sizing can be anything relevant to your initiative, we recommend an annualized view of the impact so you can easily compare across initiatives. This is important because the launch date can significantly change the in-year estimated impact: an initiative that goes live late in the year contributes only a few months of impact to that year’s numbers.
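As a rough illustration of why annualizing helps, here is a minimal Python sketch. It assumes the initiative delivers its impact evenly each month once it is live, which real launches rarely do exactly; the function name `in_year_impact` and the 12,000-user figure are hypothetical.

```python
def in_year_impact(annual_impact: float, launch_month: int) -> float:
    """Share of an annualized impact realized in the launch year.

    Assumes (hypothetically) that impact accrues evenly each month from the
    launch month (1 = January) through December.
    """
    if not 1 <= launch_month <= 12:
        raise ValueError("launch_month must be between 1 and 12")
    months_live = 12 - launch_month + 1
    return annual_impact * months_live / 12


annual_impact = 12_000  # hypothetical: extra active users per full year

for month in (1, 7, 10):
    in_year = in_year_impact(annual_impact, month)
    print(f"Launch in month {month:>2}: in-year {in_year:,.0f}, "
          f"annualized {annual_impact:,}")
```

The annualized figure stays the same whatever the launch month, while the in-year figure shrinks the later the launch, so annualized numbers give a fairer basis for comparing initiatives.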

Diving deeper into how to size an opportunity, below are a few methods we recommend for various scenarios.

Directional T-Shirt Sizing

Directional t-shirt sizing is the most common approach when opportunity sizing an existing initiative, and it’s a method anyone (not just data scientists) can use with a bit of data to inform their intuition. This method is based on rough estimates and depends on subject matter experts to estimate the opportunity size from similar experiences they’ve observed in the past and from numbers derived from industry standards. The estimates used in this method rely on knowing your product or service and your domain (for example, marketing, fulfillment, etc.). Usually the assumptions are generalized (for example, overall conversion rates based on averages or medians) rather than specific to the initiative at hand.

For example, let’s say your Growth Marketing team is trying to update an email sequence (a series of emails to your users about a new product or feature) and is looking to assess the size of the opportunity. Using the directional t-shirt sizing method, you can use the following data to inform your equation:

- The open rates of your top-performing content

- The industry average of open rates

Say your top-performing content has an open rate of five percent, while the industry average is ten percent. Based on these benchmarks, you can assume that the opportunity can be doubled (from five to ten percent).
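The arithmetic behind this directional estimate fits in a few lines of Python. The two open rates come from the example above; the 50,000-email send volume is a hypothetical figure added only to turn the ratio into a count.

```python
# Directional t-shirt sizing: a quick benchmark ratio, not a forecast.
top_open_rate = 0.05       # open rate of our top-performing content
industry_open_rate = 0.10  # industry average open rate

uplift_factor = industry_open_rate / top_open_rate
print(f"Directional uplift: {uplift_factor:.1f}x")  # the "doubling" in the text

# Hypothetical send volume, used only to express the ratio as extra opens.
emails_sent = 50_000
baseline_opens = emails_sent * top_open_rate
target_opens = emails_sent * industry_open_rate
print(f"Opens: {baseline_opens:,.0f} -> {target_opens:,.0f} "
      f"(+{target_opens - baseline_opens:,.0f})")
```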

This method trades accuracy for speed, so there is a risk of embedded biases and of not reflecting thoroughly on the assumptions made. Directional t-shirt sizing should only be used during early ideation or for sanity checks. Opportunity sizing for growth initiatives should use the next method: bottom-up.

Bottom-Up Using Comparables

Unlike directional t-shirt sizing, the bottom-up method uses the performance of a specific comparable product or system as a benchmark, and relies on the specific skills of a data scientist to make data-informed decisions. It’s used to size the opportunity of an existing initiative, and because it relies on observed data from similar systems, it tends to be more accurate than directional t-shirt sizing. Here are some tips for using the bottom-up method:

1. Understand the performance of a product or system that is comparable.

To introduce any enhancements to your current product or system, you need to understand how it’s performing in the first place. You’ll want to identify, observe and understand the performance rates in a comparable product or system, including the specifics of its unique audience and process.

For example, let’s say your Growth Marketing team wants to localize a new welcome email to prospective users in Italy that will go out to 100,000 new leads per year. A comparable system could be a localized welcome email in France that the team sent out the prior year. With your comparable system identified, you’ll want to dig into some key questions and performance metrics like:

- How many people received the email?

- Is there anything unique about that audience selection?

- What is the participation rate of the email?

- What is the conversion rate of the sequence? Or in other words, of those that opened your welcome email, how many converted to customers?

Let’s say we identified that our current non-localized email in Italy has a click-through rate (CTR) of three percent, while our localized email in France has a CTR of five percent over one year. Based on the metrics of your comparable system, you can identify a base metric and make assumptions about how your new initiative will perform.

2. Be clear and document your assumptions.

As you think about your initiative, be clear and document your assumptions about its potential impact and the why behind each assumption. Using the performance metrics of your comparable system, you can generate an assumed base metric and the potential impact your initiative will have on that metric. With your base metric in hand, you’ll want to consider the positive and negative impacts your initiative may have, so quantify your estimate in ranges with an upper and lower bound.

Returning to our localized welcome email example, based on the CTR metrics from our comparable system we can assume the impact of our Italy localization initiative: if we send out a localized welcome email to 100,000 new leads in Italy, we will obtain a CTR between three and five percent (+/- delta) in one year. This is based on our assumptions that localized content will perform better than non-localized content, as seen in the performance metrics of our localized welcome email in France.
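Here is a minimal Python sketch of this range estimate, using the 100,000 leads and the three and five percent CTRs from the example. Treating the Italy baseline as the lower bound and the France result as the upper bound is our reading of the example, not a fixed rule.

```python
# Bottom-up range for the Italy localization example.
leads_per_year = 100_000
ctr_lower = 0.03  # lower bound: today's non-localized email in Italy
ctr_upper = 0.05  # upper bound: the localized comparable in France

clicks_lower = leads_per_year * ctr_lower
clicks_upper = leads_per_year * ctr_upper
relative_lift = (ctr_upper - ctr_lower) / ctr_lower

print(f"Expected clicks per year: {clicks_lower:,.0f} to {clicks_upper:,.0f}")
print(f"Best-case relative CTR lift: {relative_lift:.0%}")
```

Keeping both bounds, rather than a single point estimate, makes the assumption explicit: the low end assumes localization adds nothing, and the high end assumes Italy performs like France.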

3. Identify the impact of your initiative on your business’ wider goals.

Now that you have an opportunity sizing estimate for your initiative, the next question that comes to mind is “what does that mean for the rest of your business goals?” To answer this, you’ll want to estimate the impact on your top-line metric. This enables you to compare different initiatives with an apples-to-apples lens, while also avoiding the tendency to favor larger numbers when making comparisons and assessing impact. For example, a one percent change in the number of sessions can look much bigger than a three percent change in the number of customers, which is further down the funnel.
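To make the sessions-versus-customers point concrete, the sketch below uses hypothetical funnel numbers (2,000,000 sessions and an assumed two percent session-to-customer rate) and assumes the extra sessions convert at the same rate as existing ones.

```python
# Hypothetical funnel, used only to compare a 1% and a 3% change fairly.
sessions = 2_000_000
session_to_customer_rate = 0.02  # assumed; extra sessions convert at this rate
customers = sessions * session_to_customer_rate  # 40,000

# Option A: +1% sessions. Option B: +3% customers.
extra_sessions = sessions * 0.01  # 20,000 looks big...
extra_customers_a = extra_sessions * session_to_customer_rate  # ...but is 400 customers
extra_customers_b = customers * 0.03  # 1,200 customers

print(f"A: +{extra_sessions:,.0f} sessions -> +{extra_customers_a:,.0f} customers")
print(f"B: +{extra_customers_b:,.0f} customers")
```

Once both options are expressed in the same top-line unit (customers), the smaller-looking three percent change turns out to be the larger opportunity.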

Returning to our localized welcome email example, we should ask ourselves how an increase in CTR affects our top-line metric of active user count. Let’s say that when we localized the email in France, we saw a five percent increase in CTR that translated to a three percent increase in active users per year. Accordingly, if we localize the welcome email in Italy, we may expect a similar three percent increase, which across the 100,000 new leads would translate to 3,000 more active users per year.
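Translating the uplift into the top-line metric is a single multiplication. This sketch reuses the three percent figure observed in France as the Italy assumption, applied to the 100,000 leads; the helper name `extra_active_users` is hypothetical.

```python
def extra_active_users(leads: int, active_user_uplift: float) -> float:
    """Convert an assumed uplift (share of leads reached) into extra active users."""
    return leads * active_user_uplift


# Assumption carried over from France: roughly 3% more active users.
italy_estimate = extra_active_users(100_000, 0.03)
print(f"Estimated extra active users per year: {italy_estimate:,.0f}")
```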

Second-order thinking is a great asset here. It’s beneficial to consider potential modifiers and their impact. For instance, perhaps getting more people to click on our welcome email will reduce our funnel performance because lower-intent people are clicking through. Or perhaps it will improve funnel performance because people are better oriented to the offer. What are the ranges of potential impact? What evidence do we have to support these ranges? This thinking may change our proposal: rather than just changing our welcome email, we may also have to change landing pages, audience selection, or other upstream or downstream aspects.
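One lightweight way to answer “what are the ranges of potential impact?” is a small scenario table. In this sketch the 0.8, 1.0, and 1.2 funnel modifiers are hypothetical placeholders for the second-order effects described above, applied to the 3,000-user estimate; in practice they should come from evidence such as past funnel data.

```python
# Hypothetical second-order modifiers on downstream funnel performance:
# below 1.0 if extra clicks come from lower-intent people,
# above 1.0 if people arrive better oriented to the offer.
base_extra_active_users = 3_000
funnel_modifiers = {"pessimistic": 0.8, "neutral": 1.0, "optimistic": 1.2}

estimates = {
    name: base_extra_active_users * modifier
    for name, modifier in funnel_modifiers.items()
}
for name, estimate in estimates.items():
    print(f"{name:>11}: {estimate:,.0f} extra active users per year")
```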

Top-Down

The top-down method should be used when opportunity sizing a new initiative. This method is more nuanced as you’re not optimizing something that exists. With the top-down method, you’ll start by using a larger set of vague information, which you’ll then attempt to narrow down into a more accurate estimation based on assumptions and observations. 2

Here are a few tips on how to implement the top-down method:

1. Gather information about your new initiative.

Unlike the bottom-up method, you won’t have a comparable system to establish a base metric. Instead, seek as much information on your new initiative as you can from internal or external sources.

For example, let’s say you’re looking to size the opportunity of expanding your product or service to a new market. In this case, you might want to get help from your product research team to gain more information on the size of the market, number of potential users in that market, competitors, etc.

2. Be clear and document your assumptions.

Just as with the bottom-up method, you’ll want to clearly identify your estimates and the evidence you have to support them. For new initiatives, assumptions typically lean more optimistic than they do for existing initiatives, because we’re biased to believe that our initiatives will have a positive impact. This means you need to be rigorous in testing your assumptions as part of the sizing process. Some ways to test your assumptions include:

- Using the range of improvement of previous initiative launches to give you a sense of what's possible.

- Bringing the business case to senior stakeholders and arguing your case. This often forces you to think twice about your assumptions.

You should be conservative in your initial estimates to account for this lack of precision in your understanding of the potential.
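As a sketch of the first check in the list above, this snippet compares a proposed uplift against the range of previous launches. The uplift values are hypothetical, and capping the starting assumption at the historical median is one possible conservative convention, not a prescribed one.

```python
import statistics

# Hypothetical relative uplifts observed after previous launches.
past_launch_uplifts = [0.01, 0.02, 0.02, 0.03, 0.04, 0.06, 0.08]
proposed_uplift = 0.10  # the (possibly optimistic) assumption for the new initiative

low = min(past_launch_uplifts)
high = max(past_launch_uplifts)
median = statistics.median(past_launch_uplifts)
print(f"Past launches ranged from {low:.0%} to {high:.0%} (median {median:.0%})")

if proposed_uplift > high:
    print("Proposed uplift beats every past launch: revisit the assumption.")

# One conservative convention: never assume more than the historical median.
conservative_uplift = min(proposed_uplift, median)
print(f"Conservative starting assumption: {conservative_uplift:.0%}")
```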

Looking at our example of opportunity sizing a new market, we’ll want to document some assumptions about:

- The size of the market: What is the size of the existing market versus the new market? You can gather this information from external datasets. In the absence of data on a market or audience, you can also make assumptions based on similar audiences or regions elsewhere.

- The rate at which you think you can reach and engage this market: This includes the assumed conversion rates of new users. The conversion rates may be assumed to be similar to past performance when a new channel or audience was introduced. You can use the tips identified in the bottom-up method.
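These two assumptions can be combined into a simple top-down estimate: market size × reach rate × conversion rate. Every number in this Python sketch is hypothetical, and the `TopDownScenario` class is just an illustrative container; keeping a conservative scenario next to the base case follows the advice to start with conservative estimates.

```python
from dataclasses import dataclass


@dataclass
class TopDownScenario:
    market_size: int        # potential users in the new market (external data)
    reach_rate: float       # share of the market we expect to reach
    conversion_rate: float  # share of reached users who become active users

    def active_users(self) -> float:
        return self.market_size * self.reach_rate * self.conversion_rate


# All inputs are hypothetical placeholders.
scenarios = {
    "conservative": TopDownScenario(500_000, reach_rate=0.10, conversion_rate=0.02),
    "base": TopDownScenario(500_000, reach_rate=0.20, conversion_rate=0.03),
}
for name, scenario in scenarios.items():
    print(f"{name}: {scenario.active_users():,.0f} new active users")
```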

3. Identify the impact of your initiative on your business’ wider goals.

As with the bottom-up method, you need to assess the impact your initiative will have on your business’ wider goals. Based on the above example, what does the assumed impact of our initiative mean in terms of active users?

And there you have it! Opportunity sizing is a worthy investment that helps you say yes to the most impactful initiatives. It’s also a significant way for data science teams to help business leaders prioritize and steer decision-making. Once your initiative launches, test to see how close your sizing estimates were to actuals. This will help you hone your estimates over time.
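Checking estimates against actuals can be as simple as the sketch below, which reports whether the observed result fell inside the sized range and how far it landed from the midpoint. The function name `review_estimate` and all figures are hypothetical.

```python
def review_estimate(lower: float, upper: float, actual: float) -> dict:
    """Compare a sized range with the result observed after launch."""
    midpoint = (lower + upper) / 2
    return {
        "within_range": lower <= actual <= upper,
        "error_vs_midpoint": (actual - midpoint) / midpoint,
    }


# Hypothetical: we sized 2,400 to 3,600 extra active users; 2,100 materialized.
result = review_estimate(2_400, 3_600, 2_100)
print(f"Within range: {result['within_range']}, "
      f"error vs midpoint: {result['error_vs_midpoint']:+.0%}")
```

Tracking this error across several initiatives shows whether your sizing is systematically optimistic, which is exactly the signal you need to hone future estimates.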

Next time your business is outlining its product roadmap, or your team is trying to decide whether it’s worth it to take on a particular project, use our opportunity sizing basics to help identify the potential opportunity (or lack thereof).

Dr. Hilal is the VP of Data Science at Shopify, responsible for overseeing the data operations that power the company’s commercial and service lines.

Wherever you are, your next journey starts here! If building systems from the ground up to solve real-world problems interests you, our Engineering blog has stories about other challenges we have encountered. Intrigued? Visit our Data Science & Engineering career page to find out about our open positions. Learn about how we’re hiring to design the future together—a future that is Digital by Design.

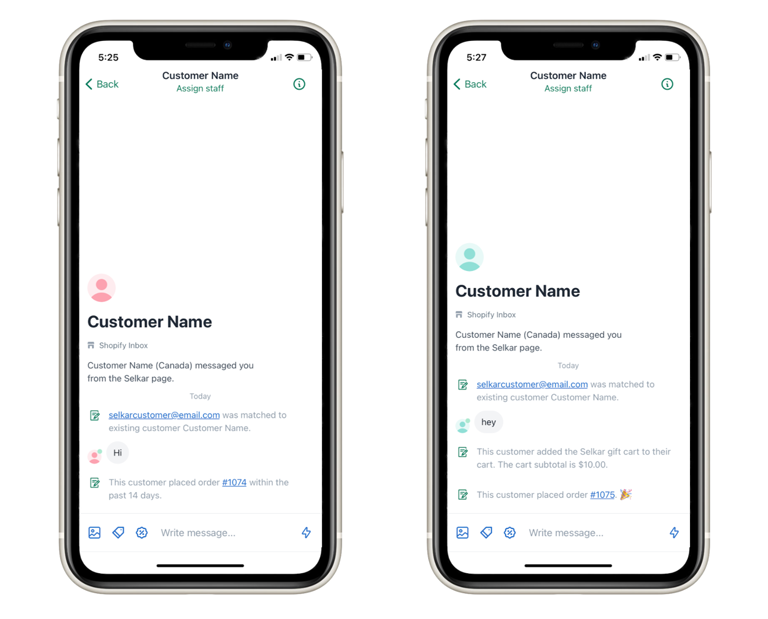

Data-Centric Machine Learning: Building Shopify Inbox’s Message Classification Model

By Eric Fung and Diego Castañeda

Shopify Inbox is a single business chat app that manages all Shopify merchants’ customer communications in one place and turns chats into conversions. As we were building the product, it was essential for us to understand how our merchants’ customers were using chat applications. Were they reaching out looking for product recommendations? Wondering if an item would ship to their destination? Or were they just saying hello? With this information, we could help merchants prioritize responses that would convert into sales and guide our product team on what functionality to build next. However, with millions of unique messages exchanged in Shopify Inbox per month, this was going to be a challenging natural language processing (NLP) task.

Our team didn’t need to start from scratch, though: off-the-shelf NLP models are widely available to everyone. With this in mind, we decided to apply a newly popular machine learning process—the data-centric approach. We wanted to focus on fine-tuning these pre-trained models on our own data to yield the highest model accuracy, and deliver the best experience for our merchants.